repo (string) | pull_number (int64) | instance_id (string) | issue_numbers (list) | base_commit (string) | patch (string) | test_patch (string) | problem_statement (string) | hints_text (string) | created_at (timestamp[s]) | language (string) | label (string) |

|---|---|---|---|---|---|---|---|---|---|---|---|

getlogbook/logbook

| 183

|

getlogbook__logbook-183

|

[

"94"

] |

1d999a784d0d8f5f7423f25c684cc1100843ccc5

|

diff --git a/logbook/handlers.py b/logbook/handlers.py

--- a/logbook/handlers.py

+++ b/logbook/handlers.py

@@ -20,6 +20,7 @@

except ImportError:

from sha import new as sha1

import traceback

+import collections

from datetime import datetime, timedelta

from collections import deque

from textwrap import dedent

@@ -1014,14 +1015,42 @@ class MailHandler(Handler, StringFormatterHandlerMixin,

The default timedelta is 60 seconds (one minute).

- The mail handler is sending mails in a blocking manner. If you are not

+ The mail handler sends mails in a blocking manner. If you are not

using some centralized system for logging these messages (with the help

of ZeroMQ or others) and the logging system slows you down you can

wrap the handler in a :class:`logbook.queues.ThreadedWrapperHandler`

that will then send the mails in a background thread.

+ `server_addr` can be a tuple of host and port, or just a string containing

+ the host to use the default port (25, or 465 if connecting securely.)

+

+ `credentials` can be a tuple or dictionary of arguments that will be passed

+ to :py:meth:`smtplib.SMTP.login`.

+

+ `secure` can be a tuple, dictionary, or boolean. As a boolean, this will

+ simply enable or disable a secure connection. The tuple is unpacked as

+ parameters `keyfile`, `certfile`. As a dictionary, `secure` should contain

+ those keys. For backwards compatibility, ``secure=()`` will enable a secure

+ connection. If `starttls` is enabled (default), these parameters will be

+ passed to :py:meth:`smtplib.SMTP.starttls`, otherwise

+ :py:class:`smtplib.SMTP_SSL`.

+

+

.. versionchanged:: 0.3

The handler supports the batching system now.

+

+ .. versionadded:: 1.0

+ `starttls` parameter added to allow disabling STARTTLS for SSL

+ connections.

+

+ .. versionchanged:: 1.0

+ If `server_addr` is a string, the default port will be used.

+

+ .. versionchanged:: 1.0

+ `credentials` parameter can now be a dictionary of keyword arguments.

+

+ .. versionchanged:: 1.0

+ `secure` can now be a dictionary or boolean in addition to a tuple.

"""

default_format_string = MAIL_FORMAT_STRING

default_related_format_string = MAIL_RELATED_FORMAT_STRING

@@ -1039,7 +1068,7 @@ def __init__(self, from_addr, recipients, subject=None,

server_addr=None, credentials=None, secure=None,

record_limit=None, record_delta=None, level=NOTSET,

format_string=None, related_format_string=None,

- filter=None, bubble=False):

+ filter=None, bubble=False, starttls=True):

Handler.__init__(self, level, filter, bubble)

StringFormatterHandlerMixin.__init__(self, format_string)

LimitingHandlerMixin.__init__(self, record_limit, record_delta)

@@ -1054,6 +1083,7 @@ def __init__(self, from_addr, recipients, subject=None,

if related_format_string is None:

related_format_string = self.default_related_format_string

self.related_format_string = related_format_string

+ self.starttls = starttls

def _get_related_format_string(self):

if isinstance(self.related_formatter, StringFormatter):

@@ -1148,20 +1178,63 @@ def get_connection(self):

"""Returns an SMTP connection. By default it reconnects for

each sent mail.

"""

- from smtplib import SMTP, SMTP_PORT, SMTP_SSL_PORT

+ from smtplib import SMTP, SMTP_SSL, SMTP_PORT, SMTP_SSL_PORT

if self.server_addr is None:

host = '127.0.0.1'

port = self.secure and SMTP_SSL_PORT or SMTP_PORT

else:

- host, port = self.server_addr

- con = SMTP()

- con.connect(host, port)

+ try:

+ host, port = self.server_addr

+ except ValueError:

+ # If server_addr is a string, the tuple unpacking will raise

+ # ValueError, and we can use the default port.

+ host = self.server_addr

+ port = self.secure and SMTP_SSL_PORT or SMTP_PORT

+

+ # Previously, self.secure was passed as con.starttls(*self.secure). This

+ # meant that starttls couldn't be used without a keyfile and certfile

+ # unless an empty tuple was passed. See issue #94.

+ #

+ # The changes below allow passing:

+ # - secure=True for secure connection without checking identity.

+ # - dictionary with keys 'keyfile' and 'certfile'.

+ # - tuple to be unpacked to variables keyfile and certfile.

+ # - secure=() equivalent to secure=True for backwards compatibility.

+ # - secure=False equivalent to secure=None to disable.

+ if isinstance(self.secure, collections.Mapping):

+ keyfile = self.secure.get('keyfile', None)

+ certfile = self.secure.get('certfile', None)

+ elif isinstance(self.secure, collections.Iterable):

+ # Allow empty tuple for backwards compatibility

+ if len(self.secure) == 0:

+ keyfile = certfile = None

+ else:

+ keyfile, certfile = self.secure

+ else:

+ keyfile = certfile = None

+

+ # Allow starttls to be disabled by passing starttls=False.

+ if not self.starttls and self.secure:

+ con = SMTP_SSL(host, port, keyfile=keyfile, certfile=certfile)

+ else:

+ con = SMTP(host, port)

+

if self.credentials is not None:

- if self.secure is not None:

+ secure = self.secure

+ if self.starttls and secure is not None and secure is not False:

con.ehlo()

- con.starttls(*self.secure)

+ con.starttls(keyfile=keyfile, certfile=certfile)

con.ehlo()

- con.login(*self.credentials)

+

+ # Allow credentials to be a tuple or dict.

+ if isinstance(self.credentials, collections.Mapping):

+ credentials_args = ()

+ credentials_kwargs = self.credentials

+ else:

+ credentials_args = self.credentials

+ credentials_kwargs = dict()

+

+ con.login(*credentials_args, **credentials_kwargs)

return con

def close_connection(self, con):

@@ -1175,7 +1248,7 @@ def close_connection(self, con):

pass

def deliver(self, msg, recipients):

- """Delivers the given message to a list of recpients."""

+ """Delivers the given message to a list of recipients."""

con = self.get_connection()

try:

con.sendmail(self.from_addr, recipients, msg.as_string())

@@ -1227,7 +1300,7 @@ class GMailHandler(MailHandler):

def __init__(self, account_id, password, recipients, **kw):

super(GMailHandler, self).__init__(

- account_id, recipients, secure=(),

+ account_id, recipients, secure=True,

server_addr=("smtp.gmail.com", 587),

credentials=(account_id, password), **kw)

diff --git a/setup.py b/setup.py

--- a/setup.py

+++ b/setup.py

@@ -158,6 +158,10 @@ def status_msgs(*msgs):

extras_require = dict()

extras_require['test'] = set(['pytest', 'pytest-cov'])

+

+if sys.version_info[:2] < (3, 3):

+ extras_require['test'] |= set(['mock'])

+

extras_require['dev'] = set(['cython']) | extras_require['test']

extras_require['execnet'] = set(['execnet>=1.0.9'])

|

diff --git a/tests/test_mail_handler.py b/tests/test_mail_handler.py

--- a/tests/test_mail_handler.py

+++ b/tests/test_mail_handler.py

@@ -7,6 +7,11 @@

from .utils import capturing_stderr_context, make_fake_mail_handler

+try:

+ from unittest.mock import Mock, call, patch

+except ImportError:

+ from mock import Mock, call, patch

+

__file_without_pyc__ = __file__

if __file_without_pyc__.endswith('.pyc'):

__file_without_pyc__ = __file_without_pyc__[:-1]

@@ -104,3 +109,126 @@ def test_group_handler_mail_combo(activation_strategy, logger):

assert len(related) == 2

assert re.search('Message type:\s+WARNING', related[0])

assert re.search('Message type:\s+DEBUG', related[1])

+

+

+def test_mail_handler_arguments():

+ with patch('smtplib.SMTP', autospec=True) as mock_smtp:

+

+ # Test the mail handler with supported arguments before changes to

+ # secure, credentials, and starttls

+ mail_handler = logbook.MailHandler(

+ from_addr='from@example.com',

+ recipients='to@example.com',

+ server_addr=('server.example.com', 465),

+ credentials=('username', 'password'),

+ secure=('keyfile', 'certfile'))

+

+ mail_handler.get_connection()

+

+ assert mock_smtp.call_args == call('server.example.com', 465)

+ assert mock_smtp.method_calls[1] == call().starttls(

+ keyfile='keyfile', certfile='certfile')

+ assert mock_smtp.method_calls[3] == call().login('username', 'password')

+

+ # Test secure=()

+ mail_handler = logbook.MailHandler(

+ from_addr='from@example.com',

+ recipients='to@example.com',

+ server_addr=('server.example.com', 465),

+ credentials=('username', 'password'),

+ secure=())

+

+ mail_handler.get_connection()

+

+ assert mock_smtp.call_args == call('server.example.com', 465)

+ assert mock_smtp.method_calls[5] == call().starttls(

+ certfile=None, keyfile=None)

+ assert mock_smtp.method_calls[7] == call().login('username', 'password')

+

+ # Test implicit port with string server_addr, dictionary credentials,

+ # dictionary secure.

+ mail_handler = logbook.MailHandler(

+ from_addr='from@example.com',

+ recipients='to@example.com',

+ server_addr='server.example.com',

+ credentials={'user': 'username', 'password': 'password'},

+ secure={'certfile': 'certfile2', 'keyfile': 'keyfile2'})

+

+ mail_handler.get_connection()

+

+ assert mock_smtp.call_args == call('server.example.com', 465)

+ assert mock_smtp.method_calls[9] == call().starttls(

+ certfile='certfile2', keyfile='keyfile2')

+ assert mock_smtp.method_calls[11] == call().login(

+ user='username', password='password')

+

+ # Test secure=True

+ mail_handler = logbook.MailHandler(

+ from_addr='from@example.com',

+ recipients='to@example.com',

+ server_addr=('server.example.com', 465),

+ credentials=('username', 'password'),

+ secure=True)

+

+ mail_handler.get_connection()

+

+ assert mock_smtp.call_args == call('server.example.com', 465)

+ assert mock_smtp.method_calls[13] == call().starttls(

+ certfile=None, keyfile=None)

+ assert mock_smtp.method_calls[15] == call().login('username', 'password')

+ assert len(mock_smtp.method_calls) == 16

+

+ # Test secure=False

+ mail_handler = logbook.MailHandler(

+ from_addr='from@example.com',

+ recipients='to@example.com',

+ server_addr=('server.example.com', 465),

+ credentials=('username', 'password'),

+ secure=False)

+

+ mail_handler.get_connection()

+

+ # starttls not called because we check len of method_calls before and

+ # after this test.

+ assert mock_smtp.call_args == call('server.example.com', 465)

+ assert mock_smtp.method_calls[16] == call().login('username', 'password')

+ assert len(mock_smtp.method_calls) == 17

+

+ with patch('smtplib.SMTP_SSL', autospec=True) as mock_smtp_ssl:

+ # Test starttls=False

+ mail_handler = logbook.MailHandler(

+ from_addr='from@example.com',

+ recipients='to@example.com',

+ server_addr='server.example.com',

+ credentials={'user': 'username', 'password': 'password'},

+ secure={'certfile': 'certfile', 'keyfile': 'keyfile'},

+ starttls=False)

+

+ mail_handler.get_connection()

+

+ assert mock_smtp_ssl.call_args == call(

+ 'server.example.com', 465, keyfile='keyfile', certfile='certfile')

+ assert mock_smtp_ssl.method_calls[0] == call().login(

+ user='username', password='password')

+

+ # Test starttls=False with secure=True

+ mail_handler = logbook.MailHandler(

+ from_addr='from@example.com',

+ recipients='to@example.com',

+ server_addr='server.example.com',

+ credentials={'user': 'username', 'password': 'password'},

+ secure=True,

+ starttls=False)

+

+ mail_handler.get_connection()

+

+ assert mock_smtp_ssl.call_args == call(

+ 'server.example.com', 465, keyfile=None, certfile=None)

+ assert mock_smtp_ssl.method_calls[1] == call().login(

+ user='username', password='password')

+

+

+

+

+

+

|

SMTP Handler STARTTLS

Due to the lack of documentation on this handler, it took a little digging to work out how to get it working.

One thing that confused me was the "secure" argument. Python's smtplib `starttls()` accepts two optional values, a keyfile and a certfile, but these are only required for _checking_ the identity. If neither is specified, smtplib will still try to establish an encrypted connection, just without checking the identity. If you do not pass a `secure` argument to Logbook, it will not attempt to establish an encrypted connection at all.

So, if you want a TLS connection to the SMTP server but don't care about checking the identity, you can pass `secure = []`, which passes the `if self.secure is not None` check. However, `secure = True` raises an error because a boolean cannot be unpacked (Logbook populates the arguments using `con.starttls(*self.secure)`).
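The unpacking failure described above can be reproduced without a mail server. In this sketch, `fake_starttls` and `call_like_old_logbook` are hypothetical names, not logbook or smtplib code: the first mimics the optional `keyfile`/`certfile` parameters that `smtplib.SMTP.starttls` accepted at the time (Python 3.12 removed them in favor of `context`), and the second mimics the pre-fix call `con.starttls(*self.secure)`.

```python
def fake_starttls(keyfile=None, certfile=None):
    """Hypothetical stand-in for the old smtplib.SMTP.starttls signature."""
    return (keyfile, certfile)


def call_like_old_logbook(secure):
    """Mimic the pre-fix call `con.starttls(*self.secure)`."""
    return fake_starttls(*secure)


# An empty tuple unpacks to no arguments: TLS without key/cert files.
print(call_like_old_logbook(()))  # (None, None)

# A (keyfile, certfile) tuple unpacks to two positional arguments.
print(call_like_old_logbook(("key.pem", "cert.pem")))

# A boolean is not iterable, so `*True` raises TypeError.
try:
    call_like_old_logbook(True)
except TypeError as exc:
    print("TypeError:", exc)
```

This is why `secure=()` works as a workaround while `secure=True` fails before any network activity happens.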

It'd help if the documentation explained the arguments for the mail handlers.

|

You're right. A simple solution is to use `secure = ()`, but I agree it has to be better documented.
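The option handling that the patch adds to `MailHandler.get_connection()` can be summarized as a few pure functions. This is a sketch, not logbook's code: `normalize_secure`, `wants_tls` and `split_credentials` are hypothetical helper names, and it imports from `collections.abc` because the `collections.Mapping`/`collections.Iterable` aliases used in the patch were removed in Python 3.10.

```python
from collections.abc import Iterable, Mapping


def normalize_secure(secure):
    """Return (keyfile, certfile) following the rules in the patched docstring."""
    if isinstance(secure, Mapping):
        # Dictionary form: missing keys default to None.
        return secure.get("keyfile"), secure.get("certfile")
    if isinstance(secure, Iterable):
        secure = tuple(secure)
        if len(secure) == 0:
            # secure=() kept for backwards compatibility: TLS, no files.
            return None, None
        keyfile, certfile = secure
        return keyfile, certfile
    # True, False and None carry no key/cert files.
    return None, None


def wants_tls(secure):
    """Mirror the patch's check: None and False disable TLS, anything else enables it."""
    return secure is not None and secure is not False


def split_credentials(credentials):
    """Return (args, kwargs) for SMTP.login(); a mapping becomes keyword arguments."""
    if isinstance(credentials, Mapping):
        return (), dict(credentials)
    return tuple(credentials), {}


print(normalize_secure({"keyfile": "k.pem"}))  # ('k.pem', None)
print(wants_tls(()), wants_tls(False))         # True False
```

Keeping the normalization separate from the connection code makes each accepted form of `secure` and `credentials` easy to check without an SMTP server.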

| 2015-12-03T01:44:29

|

python

|

Easy

|

rigetti/pyquil

| 399

|

rigetti__pyquil-399

|

[

"398",

"398"

] |

d6a0e29b2b1a506a48977a9d8432e70ec699af34

|

diff --git a/pyquil/parameters.py b/pyquil/parameters.py

--- a/pyquil/parameters.py

+++ b/pyquil/parameters.py

@@ -31,9 +31,11 @@ def format_parameter(element):

out += repr(r)

if i == 1:

- out += 'i'

+ assert np.isclose(r, 0, atol=1e-14)

+ out = 'i'

elif i == -1:

- out += '-i'

+ assert np.isclose(r, 0, atol=1e-14)

+ out = '-i'

elif i < 0:

out += repr(i) + 'i'

else:

|

diff --git a/pyquil/tests/test_parameters.py b/pyquil/tests/test_parameters.py

--- a/pyquil/tests/test_parameters.py

+++ b/pyquil/tests/test_parameters.py

@@ -14,6 +14,8 @@ def test_format_parameter():

(1j, 'i'),

(0 + 1j, 'i'),

(-1j, '-i'),

+ (1e-15 + 1j, 'i'),

+ (1e-15 - 1j, '-i')

]

for test_case in test_cases:

|

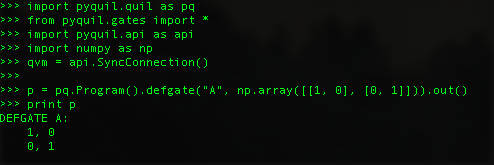

DEFGATEs are not correct

There is a problem with DEFGATEs that has manifested itself in the `phase_estimation` module of Grove (brought to our attention here: https://github.com/rigetticomputing/grove/issues/145).

I have traced the problem to commit d309ac11dabd9ea9c7ffa57dd26e68b5e7129aa9

Each of the below test cases should deterministically return the input phase, for both `phase_estimation` and `estimate_gradient`. With this commit, result is not correct and nondeterministic for phase=3/4.

```

import numpy as np

import scipy.linalg

import pyquil.api as api

from grove.alpha.phaseestimation.phase_estimation import phase_estimation

from grove.alpha.jordan_gradient.gradient_utils import *

from grove.alpha.jordan_gradient.jordan_gradient import estimate_gradient

qvm = api.QVMConnection()

trials = 1

precision = 8

for phase in [1/2, 1/4, 3/4, 1/8, 1/16, 1/32]:

Z = np.asarray([[1.0, 0.0], [0.0, -1.0]])

Rz = scipy.linalg.expm(-1j*Z*np.pi*phase)

p = phase_estimation(Rz, precision)

out = qvm.run(p, list(range(precision)), trials)

wf = qvm.wavefunction(p)

bf_estimate = measurements_to_bf(out)

bf_explicit = '{0:.16f}'.format(bf_estimate)

deci_estimate = binary_to_real(bf_explicit)

print('phase: ', phase)

print('pe', deci_estimate)

print('jg', estimate_gradient(phase, precision, n_measurements=trials, cxn=qvm))

print('\n')

```
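The pyquil patch changes how `format_parameter` prints complex numbers, which is presumably how DEFGATE matrix entries are rendered. Judging from the visible hunk, a value such as `1e-15 + 1j` previously took the `out += repr(r)` branch followed by `out += 'i'`, yielding a string like `1e-15i` (a tiny imaginary number) instead of `i`. The sketch below is a simplified, hypothetical formatter, not pyquil's function: instead of asserting as the patch does, it snaps real parts within the same `1e-14` tolerance to zero before choosing the `i`/`-i` spelling. The `0.5+2.0i` spelling for mixed values is an assumption.

```python
import math


def format_complex(z: complex, atol: float = 1e-14) -> str:
    """Hypothetical sketch: format z using `i` for the imaginary unit.

    Real parts within `atol` of zero are treated as exactly zero, so
    floating-point noise such as 1e-15 + 1j prints as `i`.
    """
    r, i = z.real, z.imag
    if math.isclose(r, 0.0, abs_tol=atol):
        r = 0.0
    if i == 0:
        return repr(r)
    if i == 1:
        imag = "i"
    elif i == -1:
        imag = "-i"
    else:
        imag = repr(i) + "i"
    if r == 0:
        return imag
    # Join real and imaginary parts with an explicit sign.
    sign = "" if imag.startswith("-") else "+"
    return repr(r) + sign + imag


print(format_complex(1e-15 + 1j))  # i
print(format_complex(1e-15 - 1j))  # -i
```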


| 2018-04-20T17:39:41

|

python

|

Hard

|

|

marcelotduarte/cx_Freeze

| 2,220

|

marcelotduarte__cx_Freeze-2220

|

[

"2210"

] |

639141207611f0edca554978f66b1ed7df3d8cdf

|

diff --git a/cx_Freeze/winversioninfo.py b/cx_Freeze/winversioninfo.py

--- a/cx_Freeze/winversioninfo.py

+++ b/cx_Freeze/winversioninfo.py

@@ -12,16 +12,16 @@

__all__ = ["Version", "VersionInfo"]

+# types

+CHAR = "c"

+WCHAR = "ss"

+WORD = "=H"

+DWORD = "=L"

+

# constants

RT_VERSION = 16

ID_VERSION = 1

-# types

-CHAR = "c"

-DWORD = "L"

-WCHAR = "H"

-WORD = "H"

-

VS_FFI_SIGNATURE = 0xFEEF04BD

VS_FFI_STRUCVERSION = 0x00010000

VS_FFI_FILEFLAGSMASK = 0x0000003F

@@ -32,6 +32,8 @@

KEY_STRING_TABLE = "040904E4"

KEY_VAR_FILE_INFO = "VarFileInfo"

+COMMENTS_MAX_LEN = (64 - 2) * 1024 // calcsize(WCHAR)

+

# To disable the experimental feature in Windows:

# set CX_FREEZE_STAMP=pywin32

# pip install -U pywin32

@@ -82,7 +84,7 @@ def to_buffer(self):

data = data.to_buffer()

elif isinstance(data, str):

data = data.encode("utf-16le")

- elif isinstance(fmt, str):

+ elif isinstance(data, int):

data = pack(fmt, data)

buffer += data

return buffer

@@ -142,7 +144,9 @@ def __init__(

value_len = value.wLength

fields.append(("Value", type(value)))

elif isinstance(value, Structure):

- value_len = calcsize("".join([f[1] for f in value._fields]))

+ value_len = 0

+ for field in value._fields:

+ value_len += calcsize(field[1])

value_type = 0

fields.append(("Value", type(value)))

@@ -199,7 +203,8 @@ def __init__(

self.valid_version: Version = valid_version

self.internal_name: str | None = internal_name

self.original_filename: str | None = original_filename

- self.comments: str | None = comments

+ # comments length must be limited to 31kb

+ self.comments: str = comments[:COMMENTS_MAX_LEN] if comments else None

self.company: str | None = company

self.description: str | None = description

self.copyright: str | None = copyright

@@ -221,6 +226,8 @@ def stamp(self, path: str | Path) -> None:

version_stamp = import_module("win32verstamp").stamp

except ImportError as exc:

raise RuntimeError("install pywin32 extension first") from exc

+ # comments length must be limited to 15kb (uses WORD='h')

+ self.comments = (self.comments or "")[: COMMENTS_MAX_LEN // 2]

version_stamp(os.fspath(path), self)

return

@@ -263,17 +270,18 @@ def version_info(self, path: Path) -> String:

elif len(self.valid_version.release) >= 4:

build = self.valid_version.release[3]

+ # use the data in the order shown in 'pepper'

data = {

- "Comments": self.comments or "",

- "CompanyName": self.company or "",

"FileDescription": self.description or "",

"FileVersion": self.version,

"InternalName": self.internal_name or path.name,

+ "CompanyName": self.company or "",

"LegalCopyright": self.copyright or "",

"LegalTrademarks": self.trademarks or "",

"OriginalFilename": self.original_filename or path.name,

"ProductName": self.product or "",

"ProductVersion": str(self.valid_version),

+ "Comments": self.comments or "",

}

is_dll = self.dll

if is_dll is None:

@@ -311,6 +319,7 @@ def version_info(self, path: Path) -> String:

string_version_info = String(KEY_VERSION_INFO, fixed_file_info)

string_version_info.children(string_file_info)

string_version_info.children(var_file_info)

+

return string_version_info

|

diff --git a/tests/test_winversioninfo.py b/tests/test_winversioninfo.py

--- a/tests/test_winversioninfo.py

+++ b/tests/test_winversioninfo.py

@@ -9,7 +9,12 @@

import pytest

from generate_samples import create_package, run_command

-from cx_Freeze.winversioninfo import Version, VersionInfo, main_test

+from cx_Freeze.winversioninfo import (

+ COMMENTS_MAX_LEN,

+ Version,

+ VersionInfo,

+ main_test,

+)

PLATFORM = get_platform()

PYTHON_VERSION = get_python_version()

@@ -97,6 +102,14 @@ def test___init__with_kwargs(self):

assert version_instance.debug is input_debug

assert version_instance.verbose is input_verbose

+ def test_big_comment(self):

+ """Tests a big comment value for the VersionInfo class."""

+ input_version = "9.9.9.9"

+ input_comments = "TestComment" + "=" * COMMENTS_MAX_LEN

+ version_instance = VersionInfo(input_version, comments=input_comments)

+ assert version_instance.version == "9.9.9.9"

+ assert version_instance.comments == input_comments[:COMMENTS_MAX_LEN]

+

@pytest.mark.parametrize(

("input_version", "version"),

[

|

Cannot freeze python-3.12 code on Windows 11

**Describe the bug**

Cannot freeze Python 3.12 code on Windows 11 Pro amd64 using the cx_Freeze 6.16 alpha (development) versions; the last one I tried is dev20.

This was working fine three weeks ago, but suddenly it started to fail like this:

```

copying C:\Users\jmarcet\scoop\apps\openjdk17\17.0.2-8\bin\api-ms-win-core-console-l1-2-0.dll -> C:\Users\jmarcet\src\movistar-u7d\build\exe.win-amd64-3.12\api-ms-win-core-console-l1-2-0.dll

copying C:\Users\jmarcet\scoop\apps\python312\3.12.1\python312.dll -> C:\Users\jmarcet\src\movistar-u7d\build\exe.win-amd64-3.12\python312.dll

WARNING: cannot find 'api-ms-win-core-path-l1-1-0.dll'

copying C:\Users\jmarcet\scoop\persist\python312\Lib\site-packages\cx_Freeze\bases\console-cpython-312-win_amd64.exe -> C:\Users\jmarcet\src\movistar-u7d\build\exe.win-amd64-3.12\movistar_epg.exe

copying C:\Users\jmarcet\scoop\persist\python312\Lib\site-packages\cx_Freeze\initscripts\frozen_application_license.txt -> C:\Users\jmarcet\src\movistar-u7d\build\exe.win-amd64-3.12\frozen_application_license.txt

data=72092

Traceback (most recent call last):

File "C:\Users\jmarcet\src\movistar-u7d\setup.py", line 25, in <module>

setup(

File "C:\Users\jmarcet\scoop\apps\python312\current\Lib\site-packages\cx_Freeze\__init__.py", line 68, in setup

setuptools.setup(**attrs)

File "C:\Users\jmarcet\scoop\apps\python312\current\Lib\site-packages\setuptools\__init__.py", line 103, in setup

return distutils.core.setup(**attrs)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "C:\Users\jmarcet\scoop\apps\python312\current\Lib\site-packages\setuptools\_distutils\core.py", line 185, in setup

return run_commands(dist)

^^^^^^^^^^^^^^^^^^

File "C:\Users\jmarcet\scoop\apps\python312\current\Lib\site-packages\setuptools\_distutils\core.py", line 201, in run_commands

dist.run_commands()

File "C:\Users\jmarcet\scoop\apps\python312\current\Lib\site-packages\setuptools\_distutils\dist.py", line 969, in run_commands

self.run_command(cmd)

File "C:\Users\jmarcet\scoop\apps\python312\current\Lib\site-packages\setuptools\dist.py", line 963, in run_command

super().run_command(command)

File "C:\Users\jmarcet\scoop\apps\python312\current\Lib\site-packages\setuptools\_distutils\dist.py", line 988, in run_command

cmd_obj.run()

File "C:\Users\jmarcet\scoop\apps\python312\current\Lib\site-packages\setuptools\_distutils\command\build.py", line 131, in run

self.run_command(cmd_name)

File "C:\Users\jmarcet\scoop\apps\python312\current\Lib\site-packages\setuptools\_distutils\cmd.py", line 318, in run_command

self.distribution.run_command(command)

File "C:\Users\jmarcet\scoop\apps\python312\current\Lib\site-packages\setuptools\dist.py", line 963, in run_command

super().run_command(command)

File "C:\Users\jmarcet\scoop\apps\python312\current\Lib\site-packages\setuptools\_distutils\dist.py", line 988, in run_command

cmd_obj.run()

File "C:\Users\jmarcet\scoop\apps\python312\current\Lib\site-packages\cx_Freeze\command\build_exe.py", line 284, in run

freezer.freeze()

File "C:\Users\jmarcet\scoop\apps\python312\current\Lib\site-packages\cx_Freeze\freezer.py", line 731, in freeze

self._freeze_executable(executable)

File "C:\Users\jmarcet\scoop\apps\python312\current\Lib\site-packages\cx_Freeze\freezer.py", line 323, in _freeze_executable

self._add_resources(exe)

File "C:\Users\jmarcet\scoop\apps\python312\current\Lib\site-packages\cx_Freeze\freezer.py", line 794, in _add_resources

version.stamp(target_path)

File "C:\Users\jmarcet\scoop\apps\python312\current\Lib\site-packages\cx_Freeze\winversioninfo.py", line 240, in stamp

handle, RT_VERSION, ID_VERSION, string_version_info.to_buffer()

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "C:\Users\jmarcet\scoop\apps\python312\current\Lib\site-packages\cx_Freeze\winversioninfo.py", line 96, in to_buffer

data = pack(fmt, data)

^^^^^^^^^^^^^^^

struct.error: 'H' format requires 0 <= number <= 65535

```
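The `struct.error` at the bottom of the traceback comes from packing a length into a 16-bit WORD, whose maximum is 65535; the `data=72092` line printed just before the traceback suggests that was the value involved. The patch caps comments at `COMMENTS_MAX_LEN` UTF-16 code units. In this sketch the `"ss"`/`"=H"` type codes and the `COMMENTS_MAX_LEN` formula are copied from the patch, while `fits_in_word` and the sample comment are hypothetical illustration.

```python
import struct

# Type codes as defined in the patch; "=" selects standard sizes with no
# alignment, so calcsize("=H") is always 2.
WCHAR = "ss"  # two 1-byte chars: one UTF-16 code unit
WORD = "=H"   # unsigned 16-bit length field

COMMENTS_MAX_LEN = (64 - 2) * 1024 // struct.calcsize(WCHAR)


def fits_in_word(value: int) -> bool:
    """Return True if value can be packed into an unsigned 16-bit WORD."""
    try:
        struct.pack(WORD, value)
    except struct.error:
        return False
    return True


long_comment = "x" * 40_000
encoded = long_comment.encode("utf-16le")
truncated = long_comment[:COMMENTS_MAX_LEN].encode("utf-16le")

print(COMMENTS_MAX_LEN)              # 31744
print(fits_in_word(len(encoded)))    # False (80000 bytes)
print(fits_in_word(len(truncated)))  # True (63488 bytes)
```

Slicing a Python string counts code points, not UTF-16 code units, so a comment made of characters outside the Basic Multilingual Plane (which encode as surrogate pairs) could in principle still exceed the limit after truncation.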

**To Reproduce**

```

git clone -b next https://github.com/jmarcet/movistar-u7d

cd movistar-u7d

pip install --force --no-cache --pre --upgrade --extra-index-url https://marcelotduarte.github.io/packages/cx_Freeze

pip install -r requirements-win.txt

python .\setup.py build

```

**Expected behavior**

Frozen artifacts saved under `build` dir

**Desktop (please complete the following information):**

- Platform information: Windows 11 Pro

- OS architecture: amd64

- cx_Freeze version: [cx_Freeze-6.16.0.dev20-cp312-cp312-win_amd64.whl](https://marcelotduarte.github.io/packages/cx-freeze/cx_Freeze-6.16.0.dev20-cp312-cp312-win_amd64.whl)

- Python version: 3.12.1

**Additional context**

I had initially reported it on #2153

|

Please check the version installed of cx_Freeze and setuptools with `pip list`.

Successfully installed aiofiles-23.2.1 aiohttp-3.9.1 aiosignal-1.3.1 asyncio-3.4.3 asyncio_dgram-2.1.2 attrs-23.2.0 cx-Logging-3.1.0 **cx_Freeze-6.16.0.dev9** defusedxml-0.8.0rc2 filelock-3.13.1 frozenlist-1.4.1 httptools-0.6.1 idna-3.6 lief-0.14.0 multidict-6.0.4 prometheus-client-0.7.1 psutil-5.9.8 pywin32-306 sanic-22.6.2 sanic-prometheus-0.2.1 sanic-routing-22.3.0 **setuptools-68.2.2** tomli-2.0.1 ujson-5.9.0 websockets-10.4 wheel-0.41.2 wmi-1.5.1 xmltodict-0.13.0 yarl-1.9.4

You should update your requirements-win.txt by inserting this as its first line:

--extra-index-url https://marcelotduarte.github.io/packages/

OR install the new development release after the requirements. Also, update setuptools.

@marcelotduarte I still have the same issue

```

> pip list

Package Version

------------------ ------------

aiofiles 23.2.1

aiohttp 3.9.1

aiosignal 1.3.1

astroid 3.0.2

asttokens 2.4.1

asyncio 3.4.3

asyncio-dgram 2.1.2

attrs 23.2.0

bandit 1.7.6

certifi 2023.11.17

charset-normalizer 3.3.2

colorama 0.4.6

cx-Freeze 6.16.0.dev23

cx_Logging 3.1.0

decorator 5.1.1

defusedxml 0.7.1

dill 0.3.7

executing 2.0.1

filelock 3.13.1

frozenlist 1.4.1

gitdb 4.0.11

GitPython 3.1.41

httpie 3.2.2

httptools 0.6.1

idna 3.6

ipython 8.20.0

isort 5.13.2

jedi 0.19.1

lief 0.15.0

markdown-it-py 3.0.0

matplotlib-inline 0.1.6

mccabe 0.7.0

mdurl 0.1.2

multidict 6.0.4

parso 0.8.3

pbr 6.0.0

pip 23.2.1

platformdirs 4.1.0

prometheus-client 0.7.1

prompt-toolkit 3.0.43

psutil 5.9.8

pure-eval 0.2.2

Pygments 2.17.2

pylint 3.0.3

pynvim 0.5.0

PySocks 1.7.1

pywin32 306

PyYAML 6.0.1

requests 2.31.0

requests-toolbelt 1.0.0

rich 13.7.0

ruff 0.1.11

sanic 22.6.2

sanic-prometheus 0.2.1

sanic-routing 22.3.0

setuptools 69.0.3

six 1.16.0

smmap 5.0.1

stack-data 0.6.3

stevedore 5.1.0

tomli 2.0.1

tomlkit 0.12.3

traitlets 5.14.1

ujson 5.9.0

urllib3 2.1.0

wcwidth 0.2.13

websockets 10.4

wheel 0.42.0

WMI 1.5.1

xmltodict 0.13.0

yarl 1.9.4

```

| 2024-01-25T06:06:14

|

python

|

Easy

|

pytest-dev/pytest-django

| 1,108

|

pytest-dev__pytest-django-1108

|

[

"1106"

] |

6cf63b65e86870abf68ae1f376398429e35864e7

|

diff --git a/pytest_django/plugin.py b/pytest_django/plugin.py

--- a/pytest_django/plugin.py

+++ b/pytest_django/plugin.py

@@ -362,8 +362,15 @@ def _get_option_with_source(

@pytest.hookimpl(trylast=True)

def pytest_configure(config: pytest.Config) -> None:

- # Allow Django settings to be configured in a user pytest_configure call,

- # but make sure we call django.setup()

+ if config.getoption("version", 0) > 0 or config.getoption("help", False):

+ return

+

+ # Normally Django is set up in `pytest_load_initial_conftests`, but we also

+ # allow users to not set DJANGO_SETTINGS_MODULE/`--ds` and instead

+ # configure the Django settings in a `pytest_configure` hookimpl using e.g.

+ # `settings.configure(...)`. In this case, the `_setup_django` call in

+ # `pytest_load_initial_conftests` only partially initializes Django, and

+ # it's fully initialized here.

_setup_django(config)

@@ -470,8 +477,7 @@ def get_order_number(test: pytest.Item) -> int:

@pytest.fixture(autouse=True, scope="session")

def django_test_environment(request: pytest.FixtureRequest) -> Generator[None, None, None]:

- """

- Ensure that Django is loaded and has its testing environment setup.

+ """Setup Django's test environment for the testing session.

XXX It is a little dodgy that this is an autouse fixture. Perhaps

an email fixture should be requested in order to be able to

@@ -481,7 +487,6 @@ def django_test_environment(request: pytest.FixtureRequest) -> Generator[None, N

we need to follow this model.

"""

if django_settings_is_configured():

- _setup_django(request.config)

from django.test.utils import setup_test_environment, teardown_test_environment

debug_ini = request.config.getini("django_debug_mode")

|

diff --git a/tests/test_manage_py_scan.py b/tests/test_manage_py_scan.py

--- a/tests/test_manage_py_scan.py

+++ b/tests/test_manage_py_scan.py

@@ -144,6 +144,37 @@ def test_django_project_found_invalid_settings_version(

result.stdout.fnmatch_lines(["*usage:*"])

+@pytest.mark.django_project(project_root="django_project_root", create_manage_py=True)

+def test_django_project_late_settings_version(

+ django_pytester: DjangoPytester,

+ monkeypatch: pytest.MonkeyPatch,

+) -> None:

+ """Late configuration should not cause an error with --help or --version."""

+ monkeypatch.delenv("DJANGO_SETTINGS_MODULE")

+ django_pytester.makepyfile(

+ t="WAT = 1",

+ )

+ django_pytester.makeconftest(

+ """

+ import os

+

+ def pytest_configure():

+ os.environ.setdefault('DJANGO_SETTINGS_MODULE', 't')

+ from django.conf import settings

+ settings.WAT

+ """

+ )

+

+ result = django_pytester.runpytest_subprocess("django_project_root", "--version", "--version")

+ assert result.ret == 0

+

+ result.stdout.fnmatch_lines(["*This is pytest version*"])

+

+ result = django_pytester.runpytest_subprocess("django_project_root", "--help")

+ assert result.ret == 0

+ result.stdout.fnmatch_lines(["*usage:*"])

+

+

@pytest.mark.django_project(project_root="django_project_root", create_manage_py=True)

def test_runs_without_error_on_long_args(django_pytester: DjangoPytester) -> None:

django_pytester.create_test_module(

|

`pytest --help` fails in a partially configured app

I'm having a difficult time narrowing down a minimal example; the repo involved is https://github.com/getsentry/sentry
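The patch makes the plugin's `pytest_configure` return early when `--version` or `--help` is given, so settings that are only configured inside a user's own `pytest_configure` are never touched in those modes. The sketch below isolates that guard. `FakeConfig` is a hypothetical stand-in for `pytest.Config` that mimics only `getoption(name, default)`, and `should_setup_django` is an illustrative name. The `> 0` comparison reflects that pytest counts repeated `--version` flags, which is also why the new test passes `--version` twice.

```python
from dataclasses import dataclass, field
from typing import Any, Dict


@dataclass
class FakeConfig:
    """Hypothetical stand-in for pytest.Config exposing only getoption()."""

    options: Dict[str, Any] = field(default_factory=dict)

    def getoption(self, name: str, default: Any = None) -> Any:
        return self.options.get(name, default)


def should_setup_django(config: FakeConfig) -> bool:
    """Mirror of the early-return guard added to pytest_configure."""
    if config.getoption("version", 0) > 0 or config.getoption("help", False):
        return False
    return True


print(should_setup_django(FakeConfig()))                # True
print(should_setup_django(FakeConfig({"version": 2})))  # False
print(should_setup_django(FakeConfig({"help": True})))  # False
```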

I have figured out _why_ it is happening and the stacktrace for it:

<details>

<summary>full stacktrace with error</summary>

```console

$ pytest --help

/Users/asottile/workspace/sentry/.venv/lib/python3.10/site-packages/trio/_core/_multierror.py:511: RuntimeWarning: You seem to already have a custom sys.excepthook handler installed. I'll skip installing Trio's custom handler, but this means MultiErrors will not show full tracebacks.

warnings.warn(

Traceback (most recent call last):

File "/Users/asottile/workspace/sentry/.venv/bin/pytest", line 8, in <module>

sys.exit(console_main())

File "/Users/asottile/workspace/sentry/.venv/lib/python3.10/site-packages/_pytest/config/__init__.py", line 190, in console_main

code = main()

File "/Users/asottile/workspace/sentry/.venv/lib/python3.10/site-packages/_pytest/config/__init__.py", line 167, in main

ret: Union[ExitCode, int] = config.hook.pytest_cmdline_main(

File "/Users/asottile/workspace/sentry/.venv/lib/python3.10/site-packages/pluggy/hooks.py", line 286, in __call__

return self._hookexec(self, self.get_hookimpls(), kwargs)

File "/Users/asottile/workspace/sentry/.venv/lib/python3.10/site-packages/pluggy/manager.py", line 93, in _hookexec

return self._inner_hookexec(hook, methods, kwargs)

File "/Users/asottile/workspace/sentry/.venv/lib/python3.10/site-packages/pluggy/manager.py", line 84, in <lambda>

self._inner_hookexec = lambda hook, methods, kwargs: hook.multicall(

File "/Users/asottile/workspace/sentry/.venv/lib/python3.10/site-packages/pluggy/callers.py", line 208, in _multicall

return outcome.get_result()

File "/Users/asottile/workspace/sentry/.venv/lib/python3.10/site-packages/pluggy/callers.py", line 80, in get_result

raise ex[1].with_traceback(ex[2])

File "/Users/asottile/workspace/sentry/.venv/lib/python3.10/site-packages/pluggy/callers.py", line 187, in _multicall

res = hook_impl.function(*args)

File "/Users/asottile/workspace/sentry/.venv/lib/python3.10/site-packages/_pytest/helpconfig.py", line 152, in pytest_cmdline_main

config._do_configure()

File "/Users/asottile/workspace/sentry/.venv/lib/python3.10/site-packages/_pytest/config/__init__.py", line 1037, in _do_configure

self.hook.pytest_configure.call_historic(kwargs=dict(config=self))

File "/Users/asottile/workspace/sentry/.venv/lib/python3.10/site-packages/pluggy/hooks.py", line 308, in call_historic

res = self._hookexec(self, self.get_hookimpls(), kwargs)

File "/Users/asottile/workspace/sentry/.venv/lib/python3.10/site-packages/pluggy/manager.py", line 93, in _hookexec

return self._inner_hookexec(hook, methods, kwargs)

File "/Users/asottile/workspace/sentry/.venv/lib/python3.10/site-packages/pluggy/manager.py", line 84, in <lambda>

self._inner_hookexec = lambda hook, methods, kwargs: hook.multicall(

File "/Users/asottile/workspace/sentry/.venv/lib/python3.10/site-packages/pluggy/callers.py", line 208, in _multicall

return outcome.get_result()

File "/Users/asottile/workspace/sentry/.venv/lib/python3.10/site-packages/pluggy/callers.py", line 80, in get_result

raise ex[1].with_traceback(ex[2])

File "/Users/asottile/workspace/sentry/.venv/lib/python3.10/site-packages/pluggy/callers.py", line 187, in _multicall

res = hook_impl.function(*args)

File "/Users/asottile/workspace/sentry/.venv/lib/python3.10/site-packages/pytest_django/plugin.py", line 367, in pytest_configure

_setup_django(config)

File "/Users/asottile/workspace/sentry/.venv/lib/python3.10/site-packages/pytest_django/plugin.py", line 238, in _setup_django

blocking_manager = config.stash[blocking_manager_key]

File "/Users/asottile/workspace/sentry/.venv/lib/python3.10/site-packages/_pytest/stash.py", line 80, in __getitem__

return cast(T, self._storage[key])

KeyError: <_pytest.stash.StashKey object at 0x1066ab520>

```

</details>

</summary>

basically what's happening is the setup is skipped here: https://github.com/pytest-dev/pytest-django/blob/6cf63b65e86870abf68ae1f376398429e35864e7/pytest_django/plugin.py#L300-L301

normally it sets the thing that's being looked up here: https://github.com/pytest-dev/pytest-django/blob/6cf63b65e86870abf68ae1f376398429e35864e7/pytest_django/plugin.py#L358

which then fails to lookup here: https://github.com/pytest-dev/pytest-django/blob/6cf63b65e86870abf68ae1f376398429e35864e7/pytest_django/plugin.py#L238

something about sentry's `tests/conftest.py` initializes enough of django that `pytest-django` takes over. but since the setup has been skipped it fails to set up properly. I suspect that #238 is playing poorly with something.

of note this worked before I upgraded `pytest-django` (I was previously on 4.4.0 and upgraded to 4.7.0 to get django 4.x support)

will try and narrow down a smaller reproduction...

|

That’s a fun one! Hopefully using `config.stash.get()` calls and acting only on non-`None` values will be enough to fix the issue...

here's a minimal case:

```console

==> t.py <==

WAT = 1

==> tests/__init__.py <==

==> tests/conftest.py <==

import os

def pytest_configure():

os.environ.setdefault('DJANGO_SETTINGS_MODULE', 't')

from django.conf import settings

settings.WAT

```

| 2024-01-29T14:22:15

|

python

|

Hard

|

marcelotduarte/cx_Freeze

| 2,597

|

marcelotduarte__cx_Freeze-2597

|

[

"2596"

] |

df2c8aef8f92da535a1bb657706ca4496b1c3352

|

diff --git a/cx_Freeze/finder.py b/cx_Freeze/finder.py

--- a/cx_Freeze/finder.py

+++ b/cx_Freeze/finder.py

@@ -537,7 +537,10 @@ def _replace_package_in_code(module: Module) -> CodeType:

# Insert a bytecode to set __package__ as module.parent.name

codes = [LOAD_CONST, pkg_const_index, STORE_NAME, pkg_name_index]

codestring = bytes(codes) + code.co_code

- consts.append(module.parent.name)

+ if module.file.stem == "__init__":

+ consts.append(module.name)

+ else:

+ consts.append(module.parent.name)

code = code_object_replace(

code, co_code=codestring, co_consts=consts

)

diff --git a/cx_Freeze/hooks/scipy.py b/cx_Freeze/hooks/scipy.py

--- a/cx_Freeze/hooks/scipy.py

+++ b/cx_Freeze/hooks/scipy.py

@@ -18,12 +18,18 @@

def load_scipy(finder: ModuleFinder, module: Module) -> None:

"""The scipy package.

- Supported pypi and conda-forge versions (lasted tested version is 1.11.2).

+ Supported pypi and conda-forge versions (lasted tested version is 1.14.1).

"""

source_dir = module.file.parent.parent / f"{module.name}.libs"

if source_dir.exists(): # scipy >= 1.9.2 (windows)

- finder.include_files(source_dir, f"lib/{source_dir.name}")

- replace_delvewheel_patch(module)

+ if IS_WINDOWS:

+ finder.include_files(source_dir, f"lib/{source_dir.name}")

+ replace_delvewheel_patch(module)

+ else:

+ target_dir = f"lib/{source_dir.name}"

+ for source in source_dir.iterdir():

+ finder.lib_files[source] = f"{target_dir}/{source.name}"

+

finder.include_package("scipy.integrate")

finder.include_package("scipy._lib")

finder.include_package("scipy.misc")

|

diff --git a/samples/scipy/test_scipy.py b/samples/scipy/test_scipy.py

--- a/samples/scipy/test_scipy.py

+++ b/samples/scipy/test_scipy.py

@@ -1,8 +1,6 @@

"""A simple script to demonstrate scipy."""

-from scipy.stats import norm

+from scipy.spatial.transform import Rotation

if __name__ == "__main__":

- print(

- "bounds of distribution lower: {}, upper: {}".format(*norm.support())

- )

+ print(Rotation.from_euler("XYZ", [10, 10, 10], degrees=True).as_matrix())

|

cx-Freeze - No module named 'scipy._lib.array_api_compat._aliases'

**Prerequisite**

This was previously reported in the closed issue #2544, where no action was taken. I include a minimal script that produces the problem for me.

**Describe the bug**

When running the compiled executable, I get the following error:

```

PS C:\dat\projects\gazeMapper\cxFreeze\build\exe.win-amd64-3.10> .\test.exe

Traceback (most recent call last):

File "C:\dat\projects\gazeMapper\cxFreeze\.venv\Lib\site-packages\cx_Freeze\initscripts\__startup__.py", line 141, in run

module_init.run(f"__main__{name}")

File "C:\dat\projects\gazeMapper\cxFreeze\.venv\Lib\site-packages\cx_Freeze\initscripts\console.py", line 25, in run

exec(code, main_globals)

File "C:\dat\projects\gazeMapper\cxFreeze\test.py", line 1, in <module>

from scipy.spatial.transform import Rotation

File "C:\dat\projects\gazeMapper\cxFreeze\.venv\lib\site-packages\scipy\spatial\__init__.py", line 110, in <module>

from ._kdtree import *

File "C:\dat\projects\gazeMapper\cxFreeze\.venv\lib\site-packages\scipy\spatial\_kdtree.py", line 4, in <module>

from ._ckdtree import cKDTree, cKDTreeNode

File "_ckdtree.pyx", line 11, in init scipy.spatial._ckdtree

File "C:\dat\projects\gazeMapper\cxFreeze\.venv\lib\site-packages\scipy\sparse\__init__.py", line 293, in <module>

from ._base import *

File "C:\dat\projects\gazeMapper\cxFreeze\.venv\lib\site-packages\scipy\sparse\_base.py", line 5, in <module>

from ._sputils import (asmatrix, check_reshape_kwargs, check_shape,

File "C:\dat\projects\gazeMapper\cxFreeze\.venv\lib\site-packages\scipy\sparse\_sputils.py", line 10, in <module>

from scipy._lib._util import np_long, np_ulong

File "C:\dat\projects\gazeMapper\cxFreeze\.venv\lib\site-packages\scipy\_lib\_util.py", line 18, in <module>

from scipy._lib._array_api import array_namespace, is_numpy, size as xp_size

File "C:\dat\projects\gazeMapper\cxFreeze\.venv\lib\site-packages\scipy\_lib\_array_api.py", line 21, in <module>

from scipy._lib.array_api_compat import (

File "C:\dat\projects\gazeMapper\cxFreeze\.venv\lib\site-packages\scipy\_lib\array_api_compat\numpy\__init__.py", line 16, in <module>

__import__(__package__ + '.linalg')

ModuleNotFoundError: No module named 'scipy._lib.array_api_compat._aliases'

```

**To Reproduce**

Two files:

test.py

```python

from scipy.spatial.transform import Rotation

print(Rotation.from_euler('XYZ', [10, 10, 10], degrees=True).as_matrix())

```

setup.py:

```python

import cx_Freeze

import pathlib

import sys

import site

path = pathlib.Path(__file__).absolute().parent

def get_include_files():

# don't know if this is a bad idea, it certainly didn't help

files = []

# scipy dlls

for d in site.getsitepackages():

d=pathlib.Path(d)/'scipy'/'.libs'

if d.is_dir():

for f in d.iterdir():

if f.is_file() and (f.suffix=='' or f.suffix in ['.dll']):

files.append((f,pathlib.Path('lib')/f.name))

return files

build_options = {

"build_exe": {

"optimize": 1,

"packages": [

'numpy','scipy'

],

"excludes":["tkinter"],

"zip_include_packages": "*",

"zip_exclude_packages": [],

"silent_level": 1,

"include_msvcr": True

}

}

if sys.platform.startswith("win"):

build_options["build_exe"]["include_files"] = get_include_files()

cx_Freeze.setup(

name="test",

version="0.0.1",

description="test",

executables=[

cx_Freeze.Executable(

script=path / "test.py",

target_name="test"

)

],

options=build_options,

py_modules=[]

)

```

**Expected behavior**

The executable runs without errors.

**Desktop (please complete the following information):**

- Windows 11 Enterprise

- amd64

- cx_Freeze version 7.2.2

- Python version 3.10

- Numpy 2.1.1

- Scipy 1.14.1

**Additional context**

At `\.venv\Lib\site-packages\scipy\_lib\array_api_compat` there is no `_aliases.py`, only an `__init__.py` with the following content:

```python

__version__ = '1.5.1'

from .common import * # noqa: F401, F403

```

`_aliases.py` does exist, in `\.venv\Lib\site-packages\scipy\_lib\array_api_compat\common`.

Both files are packed into `library.zip` (the whole scipy tree is).
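The `finder.py` change in this row appears to align the `__package__` value that cx_Freeze injects with what Python's regular import system sets:

- For a package's `__init__` module, `__package__` is the package's own name.
- For an ordinary submodule, it is the parent package's name.

Under the old code, `scipy/_lib/array_api_compat/numpy/__init__.py` would have received its parent's name. That would make `__import__(__package__ + '.linalg')` resolve against the wrong package, which is consistent with the traceback ending on that line.

Below is a minimal sketch of the rule, checked against standard-library modules imported normally. `expected_package` and `check_against_importlib` are illustrative names, not cx_Freeze API.

```python
import importlib


def expected_package(module_name: str, is_package: bool) -> str:
    """Value the regular import system assigns to __package__."""
    if is_package:
        # A package's __init__ module is its own package.
        return module_name
    # A plain submodule belongs to its parent package ("" for top-level modules).
    return module_name.rpartition(".")[0]


def check_against_importlib(module_name: str) -> bool:
    """Compare the rule above with what a normal import actually produced."""
    module = importlib.import_module(module_name)
    is_package = hasattr(module, "__path__")  # packages expose __path__
    return module.__package__ == expected_package(module_name, is_package)
```

For example, `expected_package("scipy._lib.array_api_compat.numpy", True)` gives the package itself. Passing `False` would give `"scipy._lib.array_api_compat"`, the value the old code effectively used.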

|

With `"zip_exclude_packages": ['scipy']` in the config, things work. I would expect it to work the same from the zip file. This will be my workaround for now.
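The workaround comes down to one change in the reporter's `build_exe` options. The sketch below shows only the relevant keys, with the others omitted. It assumes the behaviour described in cx_Freeze's documentation: everything is zipped when `zip_include_packages` is `"*"`, but packages listed in `zip_exclude_packages` are placed in the file system instead of `library.zip`.

```python
# Trimmed variant of the reporter's build options (other keys omitted).
build_options = {
    "build_exe": {
        "packages": ["numpy", "scipy"],
        "excludes": ["tkinter"],
        "zip_include_packages": "*",        # zip every package by default...
        "zip_exclude_packages": ["scipy"],  # ...but keep scipy as plain files on disk
    }
}
```

Keeping scipy outside the zip sidesteps the `__package__` problem rather than fixing it. The `finder.py` patch is what makes the zipped layout work.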

| 2024-10-02T02:42:26

|

python

|

Easy

|

rigetti/pyquil

| 1,149

|

rigetti__pyquil-1149

|

[

"980"

] |

07db509c5293df2b4624ca6ac409e4fce2666ea1

|

diff --git a/pyquil/device/_isa.py b/pyquil/device/_isa.py

--- a/pyquil/device/_isa.py

+++ b/pyquil/device/_isa.py

@@ -13,8 +13,8 @@

# See the License for the specific language governing permissions and

# limitations under the License.

##############################################################################

-from collections import namedtuple

-from typing import Union

+import sys

+from typing import Any, Dict, List, Optional, Sequence, Tuple, Union

import networkx as nx

import numpy as np

@@ -22,35 +22,64 @@

from pyquil.quilatom import Parameter, unpack_qubit

from pyquil.quilbase import Gate

-THETA = Parameter("theta")

-"Used as the symbolic parameter in RZ, CPHASE gates."

+if sys.version_info < (3, 7):

+ from pyquil.external.dataclasses import dataclass

+else:

+ from dataclasses import dataclass

DEFAULT_QUBIT_TYPE = "Xhalves"

DEFAULT_EDGE_TYPE = "CZ"

+THETA = Parameter("theta")

+"Used as the symbolic parameter in RZ, CPHASE gates."

+

-Qubit = namedtuple("Qubit", ["id", "type", "dead", "gates"])

-Edge = namedtuple("Edge", ["targets", "type", "dead", "gates"])

-_ISA = namedtuple("_ISA", ["qubits", "edges"])

+@dataclass

+class MeasureInfo:

+ operator: Optional[str] = None

+ qubit: Optional[int] = None

+ target: Optional[Union[int, str]] = None

+ duration: Optional[float] = None

+ fidelity: Optional[float] = None

-MeasureInfo = namedtuple("MeasureInfo", ["operator", "qubit", "target", "duration", "fidelity"])

-GateInfo = namedtuple("GateInfo", ["operator", "parameters", "arguments", "duration", "fidelity"])

-# make Qubit and Edge arguments optional

-Qubit.__new__.__defaults__ = (None,) * len(Qubit._fields)

-Edge.__new__.__defaults__ = (None,) * len(Edge._fields)

-MeasureInfo.__new__.__defaults__ = (None,) * len(MeasureInfo._fields)

-GateInfo.__new__.__defaults__ = (None,) * len(GateInfo._fields)

+@dataclass

+class GateInfo:

+ operator: Optional[str] = None

+ parameters: Optional[Sequence[Union[str, float]]] = None

+ arguments: Optional[Sequence[Union[str, float]]] = None

+ duration: Optional[float] = None

+ fidelity: Optional[float] = None

-class ISA(_ISA):

+@dataclass

+class Qubit:

+ id: int

+ type: Optional[str] = None

+ dead: Optional[bool] = None

+ gates: Optional[Sequence[Union[GateInfo, MeasureInfo]]] = None

+

+

+@dataclass

+class Edge:

+ targets: Tuple[int, ...]

+ type: Optional[str] = None

+ dead: Optional[bool] = None

+ gates: Optional[Sequence[GateInfo]] = None

+

+

+@dataclass

+class ISA:

"""

Basic Instruction Set Architecture specification.

- :ivar Sequence[Qubit] qubits: The qubits associated with the ISA.

- :ivar Sequence[Edge] edges: The multi-qubit gates.

+ :ivar qubits: The qubits associated with the ISA.

+ :ivar edges: The multi-qubit gates.

"""

- def to_dict(self):

+ qubits: Sequence[Qubit]

+ edges: Sequence[Edge]

+

+ def to_dict(self) -> Dict[str, Any]:

"""

Create a JSON-serializable representation of the ISA.

@@ -80,19 +109,17 @@ def to_dict(self):

}

:return: A dictionary representation of self.

- :rtype: Dict[str, Any]

"""

- def _maybe_configure(o, t):

- # type: (Union[Qubit,Edge], str) -> dict

+ def _maybe_configure(o: Union[Qubit, Edge], t: str) -> Dict[str, Any]:

"""

Exclude default values from generated dictionary.

- :param Union[Qubit,Edge] o: The object to serialize

- :param str t: The default value for ``o.type``.

+ :param o: The object to serialize

+ :param t: The default value for ``o.type``.

:return: d

"""

- d = {}

+ d: Dict[str, Any] = {}

if o.gates is not None:

d["gates"] = [

{

@@ -127,13 +154,12 @@ def _maybe_configure(o, t):

}

@staticmethod

- def from_dict(d):

+ def from_dict(d: Dict[str, Any]) -> "ISA":

"""

Re-create the ISA from a dictionary representation.

- :param Dict[str,Any] d: The dictionary representation.

+ :param d: The dictionary representation.

:return: The restored ISA.

- :rtype: ISA

"""

return ISA(

qubits=sorted(

@@ -150,7 +176,7 @@ def from_dict(d):

edges=sorted(

[

Edge(

- targets=[int(q) for q in eid.split("-")],

+ targets=tuple(int(q) for q in eid.split("-")),

type=e.get("type", DEFAULT_EDGE_TYPE),

dead=e.get("dead", False),

)

@@ -161,13 +187,12 @@ def from_dict(d):

)

-def gates_in_isa(isa):

+def gates_in_isa(isa: ISA) -> List[Gate]:

"""

Generate the full gateset associated with an ISA.

- :param ISA isa: The instruction set architecture for a QPU.

+ :param isa: The instruction set architecture for a QPU.

:return: A sequence of Gate objects encapsulating all gates compatible with the ISA.

- :rtype: Sequence[Gate]

"""

gates = []

for q in isa.qubits:

@@ -211,6 +236,7 @@ def gates_in_isa(isa):

gates.append(Gate("XY", [THETA], targets))

gates.append(Gate("XY", [THETA], targets[::-1]))

continue

+ assert e.type is not None

if "WILDCARD" in e.type:

gates.append(Gate("_", "_", targets))

gates.append(Gate("_", "_", targets[::-1]))

@@ -220,7 +246,7 @@ def gates_in_isa(isa):

return gates

-def isa_from_graph(graph: nx.Graph, oneq_type="Xhalves", twoq_type="CZ") -> ISA:

+def isa_from_graph(graph: nx.Graph, oneq_type: str = "Xhalves", twoq_type: str = "CZ") -> ISA:

"""

Generate an ISA object from a NetworkX graph.

@@ -230,7 +256,7 @@ def isa_from_graph(graph: nx.Graph, oneq_type="Xhalves", twoq_type="CZ") -> ISA:

"""

all_qubits = list(range(max(graph.nodes) + 1))

qubits = [Qubit(i, type=oneq_type, dead=i not in graph.nodes) for i in all_qubits]

- edges = [Edge(sorted((a, b)), type=twoq_type, dead=False) for a, b in graph.edges]

+ edges = [Edge(tuple(sorted((a, b))), type=twoq_type, dead=False) for a, b in graph.edges]

return ISA(qubits, edges)

diff --git a/pyquil/device/_main.py b/pyquil/device/_main.py

--- a/pyquil/device/_main.py

+++ b/pyquil/device/_main.py

@@ -15,7 +15,7 @@

##############################################################################

import warnings

from abc import ABC, abstractmethod

-from typing import List, Tuple

+from typing import Any, Dict, List, Optional, Tuple, Union

import networkx as nx

import numpy as np

@@ -42,7 +42,7 @@

class AbstractDevice(ABC):

@abstractmethod

- def qubits(self):

+ def qubits(self) -> List[int]:

"""

A sorted list of qubits in the device topology.

"""

@@ -54,7 +54,7 @@ def qubit_topology(self) -> nx.Graph:

"""

@abstractmethod

- def get_isa(self, oneq_type="Xhalves", twoq_type="CZ") -> ISA:

+ def get_isa(self, oneq_type: str = "Xhalves", twoq_type: str = "CZ") -> ISA:

"""

Construct an ISA suitable for targeting by compilation.

@@ -65,7 +65,7 @@ def get_isa(self, oneq_type="Xhalves", twoq_type="CZ") -> ISA:

"""

@abstractmethod

- def get_specs(self) -> Specs:

+ def get_specs(self) -> Optional[Specs]:

"""

Construct a Specs object required by compilation

"""

@@ -86,7 +86,7 @@ class Device(AbstractDevice):

:ivar NoiseModel noise_model: The noise model for the device.

"""

- def __init__(self, name, raw):

+ def __init__(self, name: str, raw: Dict[str, Any]):

"""

:param name: name of the device

:param raw: raw JSON response from the server with additional information about this device.

@@ -102,23 +102,25 @@ def __init__(self, name, raw):

)

@property

- def isa(self):

+ def isa(self) -> Optional[ISA]:

warnings.warn("Accessing the static ISA is deprecated. Use `get_isa`", DeprecationWarning)

return self._isa

- def qubits(self):

+ def qubits(self) -> List[int]:

+ assert self._isa is not None

return sorted(q.id for q in self._isa.qubits if not q.dead)

def qubit_topology(self) -> nx.Graph:

"""

The connectivity of qubits in this device given as a NetworkX graph.

"""

+ assert self._isa is not None

return isa_to_graph(self._isa)

- def get_specs(self):

+ def get_specs(self) -> Optional[Specs]:

return self.specs

- def get_isa(self, oneq_type=None, twoq_type=None) -> ISA:

+ def get_isa(self, oneq_type: Optional[str] = None, twoq_type: Optional[str] = None) -> ISA:

"""

Construct an ISA suitable for targeting by compilation.

@@ -130,7 +132,7 @@ def get_isa(self, oneq_type=None, twoq_type=None) -> ISA:

"make an ISA with custom gate types, you'll have to do it by hand."

)

- def safely_get(attr, index, default):

+ def safely_get(attr: str, index: Union[int, Tuple[int, ...]], default: Any) -> Any:

if self.specs is None:

return default

@@ -144,8 +146,8 @@ def safely_get(attr, index, default):

else:

return default

- def qubit_type_to_gates(q):

- gates = [

+ def qubit_type_to_gates(q: Qubit) -> List[Union[GateInfo, MeasureInfo]]:

+ gates: List[Union[GateInfo, MeasureInfo]] = [

MeasureInfo(

operator="MEASURE",

qubit=q.id,

@@ -200,9 +202,9 @@ def qubit_type_to_gates(q):

]

return gates

- def edge_type_to_gates(e):

- gates = []

- if e is None or "CZ" in e.type:

+ def edge_type_to_gates(e: Edge) -> List[GateInfo]:

+ gates: List[GateInfo] = []

+ if e is None or isinstance(e.type, str) and "CZ" in e.type:

gates += [

GateInfo(

operator="CZ",

@@ -212,7 +214,7 @@ def edge_type_to_gates(e):

fidelity=safely_get("fCZs", tuple(e.targets), DEFAULT_CZ_FIDELITY),

)

]

- if e is not None and "ISWAP" in e.type:

+ if e is None or isinstance(e.type, str) and "ISWAP" in e.type:

gates += [

GateInfo(

operator="ISWAP",

@@ -222,7 +224,7 @@ def edge_type_to_gates(e):

fidelity=safely_get("fISWAPs", tuple(e.targets), DEFAULT_ISWAP_FIDELITY),

)

]

- if e is not None and "CPHASE" in e.type:

+ if e is None or isinstance(e.type, str) and "CPHASE" in e.type:

gates += [

GateInfo(

operator="CPHASE",

@@ -232,7 +234,7 @@ def edge_type_to_gates(e):

fidelity=safely_get("fCPHASEs", tuple(e.targets), DEFAULT_CPHASE_FIDELITY),

)

]

- if e is not None and "XY" in e.type:

+ if e is None or isinstance(e.type, str) and "XY" in e.type:

gates += [

GateInfo(

operator="XY",

@@ -242,7 +244,7 @@ def edge_type_to_gates(e):

fidelity=safely_get("fXYs", tuple(e.targets), DEFAULT_XY_FIDELITY),

)

]

- if e is not None and "WILDCARD" in e.type:

+ if e is None or isinstance(e.type, str) and "WILDCARD" in e.type:

gates += [

GateInfo(

operator="_",

@@ -254,6 +256,7 @@ def edge_type_to_gates(e):

]

return gates

+ assert self._isa is not None

qubits = [

Qubit(id=q.id, type=None, dead=q.dead, gates=qubit_type_to_gates(q))

for q in self._isa.qubits

@@ -264,10 +267,10 @@ def edge_type_to_gates(e):

]

return ISA(qubits, edges)

- def __str__(self):

+ def __str__(self) -> str:

return "<Device {}>".format(self.name)

- def __repr__(self):

+ def __repr__(self) -> str:

return str(self)

@@ -284,17 +287,17 @@ class NxDevice(AbstractDevice):

def __init__(self, topology: nx.Graph) -> None:

self.topology = topology

- def qubit_topology(self):

+ def qubit_topology(self) -> nx.Graph:

return self.topology

- def get_isa(self, oneq_type="Xhalves", twoq_type="CZ"):

+ def get_isa(self, oneq_type: str = "Xhalves", twoq_type: str = "CZ") -> ISA:

return isa_from_graph(self.topology, oneq_type=oneq_type, twoq_type=twoq_type)

- def get_specs(self):

+ def get_specs(self) -> Specs:

return specs_from_graph(self.topology)

def qubits(self) -> List[int]:

return sorted(self.topology.nodes)

- def edges(self) -> List[Tuple[int, int]]:

- return sorted(tuple(sorted(pair)) for pair in self.topology.edges) # type: ignore

+ def edges(self) -> List[Tuple[Any, ...]]:

+ return sorted(tuple(sorted(pair)) for pair in self.topology.edges)

diff --git a/pyquil/device/_specs.py b/pyquil/device/_specs.py

--- a/pyquil/device/_specs.py

+++ b/pyquil/device/_specs.py

@@ -13,104 +13,105 @@

# See the License for the specific language governing permissions and

# limitations under the License.

##############################################################################

+import sys

import warnings

-from collections import namedtuple

+from typing import Any, Dict, Optional, Sequence, Tuple

import networkx as nx

-QubitSpecs = namedtuple(

- "_QubitSpecs",

- [

- "id",

- "fRO",

- "f1QRB",

- "f1QRB_std_err",

- "f1Q_simultaneous_RB",

- "f1Q_simultaneous_RB_std_err",

- "T1",

- "T2",

- "fActiveReset",

- ],

-)

-EdgeSpecs = namedtuple(

- "_QubitQubitSpecs",

- [

- "targets",

- "fBellState",

- "fCZ",

- "fCZ_std_err",

- "fCPHASE",

- "fCPHASE_std_err",

- "fXY",

- "fXY_std_err",

- "fISWAP",

- "fISWAP_std_err",

- ],

-)

-_Specs = namedtuple("_Specs", ["qubits_specs", "edges_specs"])

-

-

-class Specs(_Specs):

+if sys.version_info < (3, 7):

+ from pyquil.external.dataclasses import dataclass

+else:

+ from dataclasses import dataclass

+

+

+@dataclass

+class QubitSpecs:

+ id: int

+ fRO: Optional[float]

+ f1QRB: Optional[float]

+ f1QRB_std_err: Optional[float]

+ f1Q_simultaneous_RB: Optional[float]

+ f1Q_simultaneous_RB_std_err: Optional[float]

+ T1: Optional[float]

+ T2: Optional[float]

+ fActiveReset: Optional[float]

+

+

+@dataclass

+class EdgeSpecs:

+ targets: Tuple[int, ...]

+ fBellState: Optional[float]

+ fCZ: Optional[float]

+ fCZ_std_err: Optional[float]

+ fCPHASE: Optional[float]

+ fCPHASE_std_err: Optional[float]

+ fXY: Optional[float]

+ fXY_std_err: Optional[float]

+ fISWAP: Optional[float]

+ fISWAP_std_err: Optional[float]

+

+

+@dataclass

+class Specs:

"""

Basic specifications for the device, such as gate fidelities and coherence times.

- :ivar List[QubitSpecs] qubits_specs: The specs associated with individual qubits.

- :ivar List[EdgesSpecs] edges_specs: The specs associated with edges, or qubit-qubit pairs.

+ :ivar qubits_specs: The specs associated with individual qubits.

+ :ivar edges_specs: The specs associated with edges, or qubit-qubit pairs.

"""

- def f1QRBs(self):

+ qubits_specs: Sequence[QubitSpecs]

+ edges_specs: Sequence[EdgeSpecs]

+

+ def f1QRBs(self) -> Dict[int, Optional[float]]:

"""

Get a dictionary of single-qubit randomized benchmarking fidelities (for individual gate

operation, normalized to unity) from the specs, keyed by qubit index.

:return: A dictionary of 1Q RB fidelities, normalized to unity.

- :rtype: Dict[int, float]

"""

return {qs.id: qs.f1QRB for qs in self.qubits_specs}

- def f1QRB_std_errs(self):

+ def f1QRB_std_errs(self) -> Dict[int, Optional[float]]:

"""

Get a dictionary of the standard errors of single-qubit randomized

benchmarking fidelities (for individual gate operation, normalized to unity)

from the specs, keyed by qubit index.

:return: A dictionary of 1Q RB fidelity standard errors, normalized to unity.

- :rtype: Dict[int, float]

"""

return {qs.id: qs.f1QRB_std_err for qs in self.qubits_specs}

- def f1Q_simultaneous_RBs(self):

+ def f1Q_simultaneous_RBs(self) -> Dict[int, Optional[float]]:

"""

Get a dictionary of single-qubit randomized benchmarking fidelities (for simultaneous gate

operation across the chip, normalized to unity) from the specs, keyed by qubit index.

:return: A dictionary of simultaneous 1Q RB fidelities, normalized to unity.

- :rtype: Dict[int, float]

"""

return {qs.id: qs.f1Q_simultaneous_RB for qs in self.qubits_specs}

- def f1Q_simultaneous_RB_std_errs(self):

+ def f1Q_simultaneous_RB_std_errs(self) -> Dict[int, Optional[float]]:

"""

Get a dictionary of the standard errors of single-qubit randomized

benchmarking fidelities (for simultaneous gate operation across the chip, normalized to

unity) from the specs, keyed by qubit index.

:return: A dictionary of simultaneous 1Q RB fidelity standard errors, normalized to unity.

- :rtype: Dict[int, float]

"""

return {qs.id: qs.f1Q_simultaneous_RB_std_err for qs in self.qubits_specs}

- def fROs(self):

+ def fROs(self) -> Dict[int, Optional[float]]:

"""

Get a dictionary of single-qubit readout fidelities (normalized to unity)

from the specs, keyed by qubit index.

:return: A dictionary of RO fidelities, normalized to unity.

- :rtype: Dict[int, float]

"""

return {qs.id: qs.fRO for qs in self.qubits_specs}

- def fActiveResets(self):

+ def fActiveResets(self) -> Dict[int, Optional[float]]:

"""

Get a dictionary of single-qubit active reset fidelities (normalized to unity) from the

specs, keyed by qubit index.

@@ -119,31 +120,28 @@ def fActiveResets(self):

"""

return {qs.id: qs.fActiveReset for qs in self.qubits_specs}

- def T1s(self):

+ def T1s(self) -> Dict[int, Optional[float]]:

"""

Get a dictionary of T1s (in seconds) from the specs, keyed by qubit index.

:return: A dictionary of T1s, in seconds.

- :rtype: Dict[int, float]

"""

return {qs.id: qs.T1 for qs in self.qubits_specs}

- def T2s(self):

+ def T2s(self) -> Dict[int, Optional[float]]:

"""

Get a dictionary of T2s (in seconds) from the specs, keyed by qubit index.

:return: A dictionary of T2s, in seconds.

- :rtype: Dict[int, float]

"""

return {qs.id: qs.T2 for qs in self.qubits_specs}

- def fBellStates(self):

+ def fBellStates(self) -> Dict[Tuple[int, ...], Optional[float]]:

"""

Get a dictionary of two-qubit Bell state fidelities (normalized to unity)

from the specs, keyed by targets (qubit-qubit pairs).

:return: A dictionary of Bell state fidelities, normalized to unity.

- :rtype: Dict[tuple(int, int), float]

"""

warnings.warn(

DeprecationWarning(

@@ -153,73 +151,66 @@ def fBellStates(self):

)

return {tuple(es.targets): es.fBellState for es in self.edges_specs}

- def fCZs(self):

+ def fCZs(self) -> Dict[Tuple[int, ...], Optional[float]]:

"""

Get a dictionary of CZ fidelities (normalized to unity) from the specs,

keyed by targets (qubit-qubit pairs).

:return: A dictionary of CZ fidelities, normalized to unity.

- :rtype: Dict[tuple(int, int), float]

"""

return {tuple(es.targets): es.fCZ for es in self.edges_specs}

- def fISWAPs(self):

+ def fISWAPs(self) -> Dict[Tuple[int, ...], Optional[float]]:

"""

Get a dictionary of ISWAP fidelities (normalized to unity) from the specs,

keyed by targets (qubit-qubit pairs).

:return: A dictionary of ISWAP fidelities, normalized to unity.

- :rtype: Dict[tuple(int, int), float]

"""

return {tuple(es.targets): es.fISWAP for es in self.edges_specs}

- def fISWAP_std_errs(self):

+ def fISWAP_std_errs(self) -> Dict[Tuple[int, ...], Optional[float]]:

"""

Get a dictionary of the standard errors of the ISWAP fidelities from the specs,

keyed by targets (qubit-qubit pairs).

:return: A dictionary of ISWAP fidelities, normalized to unity.

- :rtype: Dict[tuple(int, int), float]

"""

return {tuple(es.targets): es.fISWAP_std_err for es in self.edges_specs}

- def fXYs(self):

+ def fXYs(self) -> Dict[Tuple[int, ...], Optional[float]]:

"""

Get a dictionary of XY(pi) fidelities (normalized to unity) from the specs,

keyed by targets (qubit-qubit pairs).

:return: A dictionary of XY/2 fidelities, normalized to unity.

- :rtype: Dict[tuple(int, int), float]

"""

return {tuple(es.targets): es.fXY for es in self.edges_specs}

- def fXY_std_errs(self):

+ def fXY_std_errs(self) -> Dict[Tuple[int, ...], Optional[float]]:

"""

Get a dictionary of the standard errors of the XY fidelities from the specs,

keyed by targets (qubit-qubit pairs).

:return: A dictionary of XY fidelities, normalized to unity.

- :rtype: Dict[tuple(int, int), float]

"""

return {tuple(es.targets): es.fXY_std_err for es in self.edges_specs}

- def fCZ_std_errs(self):

+ def fCZ_std_errs(self) -> Dict[Tuple[int, ...], Optional[float]]:

"""

Get a dictionary of the standard errors of the CZ fidelities from the specs,

keyed by targets (qubit-qubit pairs).

:return: A dictionary of CZ fidelities, normalized to unity.

- :rtype: Dict[tuple(int, int), float]

"""

return {tuple(es.targets): es.fCZ_std_err for es in self.edges_specs}

- def fCPHASEs(self):

+ def fCPHASEs(self) -> Dict[Tuple[int, ...], Optional[float]]:

"""

Get a dictionary of CPHASE fidelities (normalized to unity) from the specs,

keyed by targets (qubit-qubit pairs).

:return: A dictionary of CPHASE fidelities, normalized to unity.

- :rtype: Dict[tuple(int, int), float]

"""

warnings.warn(

DeprecationWarning(

@@ -229,7 +220,7 @@ def fCPHASEs(self):

)

return {tuple(es.targets): es.fCPHASE for es in self.edges_specs}

- def to_dict(self):

+ def to_dict(self) -> Dict[str, Any]:

"""

Create a JSON-serializable representation of the device Specs.

@@ -270,7 +261,6 @@ def to_dict(self):

}

:return: A dctionary representation of self.

- :rtype: Dict[str, Any]

"""

return {

"1Q": {

@@ -303,13 +293,12 @@ def to_dict(self):

}

@staticmethod

- def from_dict(d):

+ def from_dict(d: Dict[str, Any]) -> "Specs":

"""

Re-create the Specs from a dictionary representation.

- :param Dict[str, Any] d: The dictionary representation.

+ :param d: The dictionary representation.

:return: The restored Specs.

- :rtype: Specs

"""

return Specs(

qubits_specs=sorted(

@@ -332,7 +321,7 @@ def from_dict(d):

edges_specs=sorted(

[

EdgeSpecs(

- targets=[int(q) for q in e.split("-")],

+ targets=tuple(int(q) for q in e.split("-")),

fBellState=especs.get("fBellState"),

fCZ=especs.get("fCZ"),

fCZ_std_err=especs.get("fCZ_std_err"),

@@ -350,7 +339,7 @@ def from_dict(d):

)

-def specs_from_graph(graph: nx.Graph):

+def specs_from_graph(graph: nx.Graph) -> Specs:

"""

Generate a Specs object from a NetworkX graph with placeholder values for the actual specs.

|

diff --git a/pyquil/device/tests/test_device.py b/pyquil/device/tests/test_device.py

--- a/pyquil/device/tests/test_device.py

+++ b/pyquil/device/tests/test_device.py

@@ -55,10 +55,10 @@ def test_isa(isa_dict):

Qubit(id=3, type="Xhalves", dead=True),

],

edges=[

- Edge(targets=[0, 1], type="CZ", dead=False),

- Edge(targets=[0, 2], type="CPHASE", dead=False),

- Edge(targets=[0, 3], type="CZ", dead=True),

- Edge(targets=[1, 2], type="ISWAP", dead=False),

+ Edge(targets=(0, 1), type="CZ", dead=False),

+ Edge(targets=(0, 2), type="CPHASE", dead=False),

+ Edge(targets=(0, 3), type="CZ", dead=True),

+ Edge(targets=(1, 2), type="ISWAP", dead=False),

],

)

assert isa == ISA.from_dict(isa.to_dict())

@@ -115,7 +115,7 @@ def test_specs(specs_dict):

],

edges_specs=[

EdgeSpecs(

- targets=[0, 1],

+ targets=(0, 1),

fBellState=0.90,

fCZ=0.89,

fCZ_std_err=0.01,

@@ -127,7 +127,7 @@ def test_specs(specs_dict):

fCPHASE_std_err=None,

),

EdgeSpecs(

- targets=[0, 2],

+ targets=(0, 2),

fBellState=0.92,

fCZ=0.91,

fCZ_std_err=0.20,

@@ -139,7 +139,7 @@ def test_specs(specs_dict):

fCPHASE_std_err=None,

),

EdgeSpecs(

- targets=[0, 3],

+ targets=(0, 3),

fBellState=0.89,

fCZ=0.88,

fCZ_std_err=0.03,

@@ -151,7 +151,7 @@ def test_specs(specs_dict):

fCPHASE_std_err=None,

),

EdgeSpecs(

- targets=[1, 2],

+ targets=(1, 2),

fBellState=0.91,

fCZ=0.90,

fCZ_std_err=0.12,

diff --git a/pyquil/tests/test_quantum_computer.py b/pyquil/tests/test_quantum_computer.py

--- a/pyquil/tests/test_quantum_computer.py

+++ b/pyquil/tests/test_quantum_computer.py

@@ -194,8 +194,8 @@ def test_device_stuff():

assert nx.is_isomorphic(qc.qubit_topology(), topo)

isa = qc.get_isa(twoq_type="CPHASE")

- assert sorted(isa.edges)[0].type == "CPHASE"

- assert sorted(isa.edges)[0].targets == [0, 4]

+ assert isa.edges[0].type == "CPHASE"

+ assert isa.edges[0].targets == (0, 4)

def test_run(forest):

|

Change the namedtuples in device.py to dataclasses

As discussed in #961, using `dataclasses` instead of `namedtuples` would make the structures in the `device` module much easier to read, understand, and use.
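To make the request concrete, here is a minimal sketch of a dataclass in the spirit of the patch above. The trimmed `EdgeSpecs` and the `edge_from_key` helper are hypothetical and do not reproduce pyquil's real definitions. `frozen=True` is also an assumption of this sketch, not something the patch shows. The sketch shows why the patch switches `targets` from lists to tuples. Tuples are immutable and hashable, which a frozen dataclass needs for its generated `__hash__`. A tuple also never compares equal to a list, which is why the tests now expect `(0, 1)` rather than `[0, 1]`.

```python
from dataclasses import dataclass
from typing import Any, Dict, Optional, Tuple


@dataclass(frozen=True)
class EdgeSpecs:
    # Hypothetical, trimmed-down stand-in for pyquil's EdgeSpecs.
    targets: Tuple[int, ...]
    fCZ: Optional[float] = None
    fCZ_std_err: Optional[float] = None


def edge_from_key(key: str, especs: Dict[str, Any]) -> EdgeSpecs:
    # A key such as "0-1" becomes the tuple (0, 1), mirroring the patched from_dict.
    return EdgeSpecs(
        targets=tuple(int(q) for q in key.split("-")),
        fCZ=especs.get("fCZ"),
        fCZ_std_err=especs.get("fCZ_std_err"),
    )


edge = edge_from_key("0-1", {"fCZ": 0.89, "fCZ_std_err": 0.01})
print(edge)  # EdgeSpecs(targets=(0, 1), fCZ=0.89, fCZ_std_err=0.01)
```

Compared with a namedtuple, the dataclass names each field with a type in its declaration and prints a labelled repr. Freezing it keeps the value semantics that namedtuples provided.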

| 2020-01-02T19:58:40

|

python

|

Hard

|

|

pallets-eco/flask-wtf

| 512

|

pallets-eco__flask-wtf-512

|

[

"511"

] |

b86d5c6516344f85f930cdd710b14d54ac88415c

|

diff --git a/src/flask_wtf/__init__.py b/src/flask_wtf/__init__.py

--- a/src/flask_wtf/__init__.py

+++ b/src/flask_wtf/__init__.py

@@ -5,4 +5,4 @@

from .recaptcha import RecaptchaField

from .recaptcha import RecaptchaWidget

-__version__ = "1.0.0"

+__version__ = "1.0.1.dev0"

diff --git a/src/flask_wtf/form.py b/src/flask_wtf/form.py

--- a/src/flask_wtf/form.py

+++ b/src/flask_wtf/form.py

@@ -56,7 +56,7 @@ def wrap_formdata(self, form, formdata):

return CombinedMultiDict((request.files, request.form))

elif request.form:

return request.form

- elif request.get_json():

+ elif request.is_json:

return ImmutableMultiDict(request.get_json())

return None

diff --git a/src/flask_wtf/recaptcha/validators.py b/src/flask_wtf/recaptcha/validators.py

--- a/src/flask_wtf/recaptcha/validators.py

+++ b/src/flask_wtf/recaptcha/validators.py

@@ -30,7 +30,7 @@ def __call__(self, form, field):

if current_app.testing:

return True

- if request.json:

+ if request.is_json:

response = request.json.get("g-recaptcha-response", "")

else:

response = request.form.get("g-recaptcha-response", "")

|

diff --git a/tests/test_recaptcha.py b/tests/test_recaptcha.py

--- a/tests/test_recaptcha.py

+++ b/tests/test_recaptcha.py

@@ -80,7 +80,8 @@ def test_render_custom_args(app):

app.config["RECAPTCHA_DATA_ATTRS"] = {"red": "blue"}

f = RecaptchaForm()

render = f.recaptcha()

- assert "?key=%28value%29" in render

+ # new versions of url_encode allow more characters

+ assert "?key=(value)" in render or "?key=%28value%29" in render

assert 'data-red="blue"' in render

|

Update to Request.get_json() in Werkzeug 2.1.0 breaks empty forms

Similar to #510: the get_json() change in Werkzeug 2.1.0 (https://github.com/pallets/werkzeug/issues/2339) breaks any empty, non-JSON form submission.

From form.py:

```python
def wrap_formdata(self, form, formdata):
    if formdata is _Auto:
        if _is_submitted():
            if request.files:
                return CombinedMultiDict((request.files, request.form))
            elif request.form:
                return request.form
            elif request.get_json():
                return ImmutableMultiDict(request.get_json())
```

If the form is an empty ImmutableMultiDict, execution falls through to the get_json() branch. As of Werkzeug 2.1.0, get_json() checks that the Content-Type header is application/json and raises a 400 Bad Request error instead of returning None.

A possible solution would be to change `elif request.get_json()` to `elif request.is_json` (`is_json` is a property, not a method).

Expected Behavior:

Empty form submissions should be accepted as before. For an empty form, the wrapper should return None.
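The failure and the proposed fix can be reproduced without Flask. This is a minimal sketch in which `FakeRequest` and `BadRequest` are hypothetical stand-ins, not Werkzeug's classes. Only the `is_json` rule (`application/json` or `application/*+json`) and the post-2.1.0 "raise on non-JSON" behaviour of `get_json()` are modelled, and `wrap_formdata` is a simplified version of the patched branch order. Checking `is_json` first means the JSON branch is only entered when the header says JSON, so an empty form reaches `return None`.

```python
from typing import Any, Dict, Optional


class BadRequest(Exception):
    """Hypothetical stand-in for werkzeug.exceptions.BadRequest."""


class FakeRequest:
    """Hypothetical request object exposing only what wrap_formdata touches."""

    def __init__(self, mimetype: str, form: Optional[Dict[str, Any]] = None,
                 json_body: Any = None) -> None:
        self.mimetype = mimetype
        self.form = form or {}
        self.files: Dict[str, Any] = {}
        self._json = json_body

    @property
    def is_json(self) -> bool:
        # Same rule Werkzeug applies: application/json or application/*+json.
        mt = self.mimetype
        return mt == "application/json" or (
            mt.startswith("application/") and mt.endswith("+json")
        )

    def get_json(self) -> Any:
        # Models Werkzeug >= 2.1.0: a non-JSON request raises instead of returning None.
        if not self.is_json:
            raise BadRequest("Content-Type was not 'application/json'")
        return self._json


def wrap_formdata(request: FakeRequest) -> Optional[Dict[str, Any]]:
    """Simplified version of the patched branch order in flask_wtf/form.py."""
    if request.files:
        return {**request.form, **request.files}
    elif request.form:
        return request.form
    elif request.is_json:  # inspect the header first, so get_json() cannot raise
        return dict(request.get_json())
    return None


print(wrap_formdata(FakeRequest("application/x-www-form-urlencoded")))  # None
```

With the old `elif request.get_json():` condition, the same empty form would reach `get_json()` and raise `BadRequest`.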

Environment:

- Python version: 3.8

- Flask-WTF version: 1.0.0

- Flask version: 2.1

| 2022-03-31T15:26:26

|

python

|

Easy

|

|

pytest-dev/pytest-django

| 979

|

pytest-dev__pytest-django-979

|

[

"978"

] |

b3b679f2cab9dad70e318f252751ff7659b951d1

|

diff --git a/pytest_django/fixtures.py b/pytest_django/fixtures.py

--- a/pytest_django/fixtures.py

+++ b/pytest_django/fixtures.py

@@ -167,7 +167,7 @@ def _django_db_helper(

serialized_rollback,

) = False, False, None, False

- transactional = transactional or (

+ transactional = transactional or reset_sequences or (

"transactional_db" in request.fixturenames

or "live_server" in request.fixturenames

)

|

diff --git a/tests/test_database.py b/tests/test_database.py

--- a/tests/test_database.py

+++ b/tests/test_database.py

@@ -287,11 +287,16 @@ def test_reset_sequences_disabled(self, request) -> None: