JT-DA-8B


Introduction

In this work, we present JT-DA-8B, a specialized large language model designed for complex table reasoning tasks across diverse real-world scenarios. High-quality supervision for tabular reasoning is scarce. To address this, we construct a comprehensive and diverse training corpus by aggregating 29 public table QA datasets and over 300 domain-specific non-QA tables, and by generating 1 million rule-based table reasoning QA pairs. We also propose a structured pipeline that generates realistic multi-step analytical tasks involving reasoning patterns such as correlation analysis, anomaly detection, and hypothesis testing. The model is trained on top of the open-source JT-Coder-8B model, an 8B-parameter decoder-only foundation model trained from scratch. During training, we apply LLM-based scoring and workflow-aligned filtering to distill high-quality, table-centric data. To improve interpretability and execution accuracy, we further propose a four-stage table reasoning workflow consisting of table preprocessing, table sensing, tool-integrated reasoning, and prompt engineering. Experimental results show that JT-DA-8B achieves strong performance across various table tasks, demonstrating the effectiveness of data-centric generation and workflow-driven optimization.

Training Dataset

Public Data Collection

To address limitations in structured data question answering (QA), we adopt a multi-source data collection strategy spanning general language, table QA, and non-QA table data. General language datasets are obtained from platforms such as OpenCompass and GitHub using task-relevant keywords (e.g., “semantic understanding,” “code generation”), yielding ten representative benchmarks (e.g., MMLU, GSM8K). Table QA datasets are collected via keyword-based searches in academic databases, yielding 29 datasets (e.g., ToTTo, AIT-QA) spanning various QA tasks. To enhance domain coverage, we also collect non-QA table datasets across 300+ domains such as healthcare, finance, and education, targeting tasks like classification, forecasting, and anomaly detection.

Operation Data Generation

Using generic rules, we automatically generate about 1 million QA pairs from existing tables, targeting three core skills: Table Basic Operation, Table Computational Operation, and Data Analysis. For the first two skills, we select numeric tables, excluding those with missing or summary data, and apply rule-based templates involving statistical computations, value queries, and filtering to create QA pairs. For Data Analysis, we select tables with at least two numeric columns that meet computability conditions, construct a new table for each subtask, and use templates to generate QA pairs covering outlier detection, correlation analysis, and hypothesis testing.
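The rule-based generation described above can be sketched with pandas as follows. The template wording, the `SUMMARY_MARKERS` list, and the helper names (`is_eligible`, `basic_qa`, `correlation_qa`) are hypothetical illustrations of the kind of templates involved, not the actual generation rules.

```python
import pandas as pd

# Hypothetical markers that identify summary rows (e.g. "Total") in the key column
SUMMARY_MARKERS = ("total", "subtotal", "sum")


def is_eligible(df: pd.DataFrame, key_col: str) -> bool:
    """Keep numeric tables without missing values or summary rows."""
    has_numeric = not df.select_dtypes(include="number").empty
    has_missing = bool(df.isna().any().any())
    keys = df[key_col].astype(str).str.strip().str.lower()
    has_summary = bool(keys.isin(SUMMARY_MARKERS).any())
    return has_numeric and not has_missing and not has_summary


def basic_qa(df: pd.DataFrame, key_col: str) -> list:
    """Value-query, statistical, and filtering templates for each numeric column."""
    qa = []
    first_key = df[key_col].iloc[0]
    for col in df.select_dtypes(include="number").columns:
        # Value query: look up a single cell by its row key
        value = df.loc[df[key_col] == first_key, col].iloc[0]
        qa.append((f"What is the {col} of {first_key}?", value))
        # Statistical computation
        qa.append((f"What is the average {col}?", round(float(df[col].mean()), 2)))
        # Filtering: count rows above the column median
        threshold = float(df[col].median())
        count = int((df[col] > threshold).sum())
        qa.append((f"How many rows have {col} greater than {threshold}?", count))
    return qa


def correlation_qa(df: pd.DataFrame) -> list:
    """Data Analysis template: requires at least two numeric columns."""
    numeric_cols = list(df.select_dtypes(include="number").columns)
    if len(numeric_cols) < 2:
        return []
    a, b = numeric_cols[:2]
    r = df[a].corr(df[b])  # Pearson correlation by default
    return [(f"What is the Pearson correlation between {a} and {b}?", round(float(r), 2))]
```

For example, on a small table with a `city` key column and numeric `population` and `area` columns, `basic_qa(df, "city")` yields a value query, an average, and a threshold count for each numeric column.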

Complex Data Analysis Task Generation

Real-world data analysis often demands multi-step reasoning over structured tables, yet most existing datasets are limited to simple or single-step tasks, hindering the development of models with advanced analytical capabilities. To address this limitation, we construct a diverse set of tasks that closely mimic realistic analytical workflows, aiming to enhance the model’s ability to perform step-by-step reasoning within the Chain-of-Thought (CoT) paradigm.

To support this goal, we design a structured pipeline for generating complex data analysis tasks over tabular data, integrating context construction, task specification, LLM-based generation, and dual-path evaluation. Given the tabular content and task definitions, we build rich structural, semantic, and instructional contexts to guide the LLM in producing multi-step reasoning chains. Each task is explicitly defined along four dimensions: question type, assumption setting, analytical content, and expected answer format. To ensure both quality and executability, the generated outputs are evaluated through textual critique (LLM Critic) and executable verification (Python Sandbox), forming a closed-loop system for robust and realistic task generation.
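The executable-verification path can be sketched as below, assuming each generated task comes with a Python solution and an expected answer. Running the code in a separate process with a timeout is a stand-in for the Python Sandbox; a plain subprocess is not a security boundary. The `critic_score` argument and its `min_score` threshold are hypothetical placeholders for the LLM Critic's verdict.

```python
import subprocess
import sys


def run_in_sandbox(code: str, timeout: float = 10.0) -> tuple:
    """Run code in a separate Python process; return (succeeded, stripped stdout).

    A subprocess isolates crashes and enforces a timeout, but it is NOT a
    security sandbox.
    """
    try:
        proc = subprocess.run(
            [sys.executable, "-c", code],
            capture_output=True,
            text=True,
            timeout=timeout,
        )
    except subprocess.TimeoutExpired:
        return False, ""
    return proc.returncode == 0, proc.stdout.strip()


def accept_task(code: str, expected, critic_score: float, min_score: float = 8.0) -> bool:
    """Keep a generated task only if both evaluation paths pass.

    `critic_score` is a hypothetical numeric verdict from the LLM Critic.
    """
    ok, output = run_in_sandbox(code)
    return ok and output == str(expected) and critic_score >= min_score
```

Requiring both checks to pass mirrors the closed-loop idea: a task that reads well but does not execute, or executes but fails critique, is discarded.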

Data Summary

Finally, we construct a complete capability spectrum, from fundamental language understanding to advanced data analysis, encompassing six core capabilities and 34 subtasks. The whole dataset undergoes deduplication, standardization, translation, and rigorous quality control. Quality control includes manual auditing and multi-model agreement checks to ensure practical applicability, as well as cleaning and validation to eliminate formatting errors, noisy data, and inaccuracies.

Based on the dataset, we generate training data in three distinct formats: TCoT, PoT, and ICoT, each suited to different table reasoning scenarios. TCoT is well suited to text generation tasks such as table summarization or descriptive analysis. PoT is highly effective for tasks where accuracy and computational precision are critical. ICoT dynamically adapts to intermediate results through self-reflection and strategy refinement, making it particularly effective for complex, multi-step tasks such as Advanced Data Analysis.

Table 1 presents the summary statistics of the dataset.

| Category | QA number | English QA | Chinese QA | TCoT number | PoT number | ICoT number |
|---|---|---|---|---|---|---|
| Natural Language Understanding | 295406 | 230435 | 64971 | 156922 | 0 | 20044 |
| Table Understanding | 2498442 | 1325629 | 1327166 | 116008 | 78177 | 1758 |
| Table Basic Operation | 47692809 | 47692595 | 36603291 | 10988 | 102840 | 628 |
| Table Computational Operation | 17259294 | 17254644 | 15801983 | 41596 | 57272 | 4742 |
| Data Analysis | 233851 | 233851 | 233851 | 2662 | 30561 | 2622 |
| Advanced Data Analysis | 17565 | 17565 | 17565 | 0 | 17565 | 8970 |

Data Security

We prioritize data security. All data is strictly anonymized, free of personally identifiable information, and protected through encryption. Our practices comply with applicable data protection regulations to ensure safe and ethical use.

Model

Model Architecture

JT-DA-8B is built on the JT-Coder-8B model, a decoder-only LLM trained from scratch with approximately 8 billion parameters. The architecture follows the mainstream Transformer decoder design, including RMS layer normalization (RMSNorm), rotary position embeddings (RoPE), and grouped-query attention (GQA). Please see JT-Coder-8B for details of the model.
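For readers unfamiliar with these components, the following NumPy sketch illustrates two of them: RMSNorm, which rescales activations by their root mean square without mean-centering, and the key/value head sharing used by GQA. It is a generic illustration, not JT-Coder-8B's implementation; the shapes and head counts are arbitrary assumptions, since the model's configuration is not given here.

```python
import numpy as np


def rms_norm(x: np.ndarray, weight: np.ndarray, eps: float = 1e-6) -> np.ndarray:
    """RMSNorm: divide by the root mean square over the last axis, then scale.

    Unlike LayerNorm, there is no mean subtraction and no bias term.
    """
    rms = np.sqrt(np.mean(x ** 2, axis=-1, keepdims=True) + eps)
    return x / rms * weight


def expand_kv_heads(kv: np.ndarray, n_query_heads: int) -> np.ndarray:
    """GQA: share each key/value head across a group of query heads.

    kv has shape (n_kv_heads, seq_len, head_dim); the result has shape
    (n_query_heads, seq_len, head_dim), so standard attention can follow.
    """
    n_kv_heads = kv.shape[0]
    if n_query_heads % n_kv_heads != 0:
        raise ValueError("n_query_heads must be a multiple of n_kv_heads")
    return np.repeat(kv, n_query_heads // n_kv_heads, axis=0)
```

Because fewer key/value heads are stored, GQA shrinks the KV cache relative to full multi-head attention while keeping the number of query heads.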

Training Infrastructure

The model is trained on our self-developed Jiutian LLM Development Platform, which is specifically designed to support large-scale distributed training of deep learning models. Training uses a total of 96 NPUs, connected by a high-bandwidth, low-latency communication network and backed by shared high-performance storage. The training pipeline uses a distributed parallel strategy combining data parallelism and model parallelism for efficient training.

Training Data Composition

The training data is a diverse, task-oriented mixture of data types, with an emphasis on table understanding and reasoning tasks. To improve model quality, the dataset is extensively cleaned and filtered:

- Short answer filtering: Samples with overly short answers are filtered out to ensure richer training signals.

- Repetitive thinking filtering: Samples whose thinking process contains repeated substrings are filtered out using a rolling-hash-based method.

- Low information density filtering: Samples with too many verbose and redundant words are filtered out.

- LLM-based scoring: An LLM rates the quality of each question-answer pair on a scale of 0-10 based on correctness, completeness, clarity, and safety. Samples scoring lower than 8.5 are filtered out.
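The rolling-hash filter is not specified in detail; one plausible sketch is a Rabin-Karp-style hash over fixed-length character windows that flags a sample when the same window recurs too often. The window length, repeat limit, and hash parameters are assumptions, and a hash collision could in principle cause a false positive.

```python
def has_repetition(text: str, window: int = 50, max_repeats: int = 3,
                   base: int = 257, mod: int = (1 << 61) - 1) -> bool:
    """Flag text if any length-`window` substring occurs more than `max_repeats` times."""
    if len(text) < window:
        return False
    high = pow(base, window - 1, mod)  # weight of the character leaving the window
    h = 0
    for ch in text[:window]:
        h = (h * base + ord(ch)) % mod
    counts = {h: 1}
    for i in range(window, len(text)):
        # Slide the window one character: drop text[i - window], append text[i]
        h = ((h - ord(text[i - window]) * high) * base + ord(text[i])) % mod
        counts[h] = counts.get(h, 0) + 1
        if counts[h] > max_repeats:
            return True
    return False
```

Each slide costs O(1), so the whole check is linear in the length of the thinking trace.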

After filtering, the sample categories are rebalanced in proportion so that the model is adequately trained on every task:

| Category | Percentage |
|---|---|
| Natural Language Understanding | 23.65% |
| Table Understanding | 24.84% |
| Table Basic Operation | 31.16% |
| Table Computational Operation | 15.77% |
| Data Analysis | 3.99% |
| Advanced Data Analysis | 0.59% |

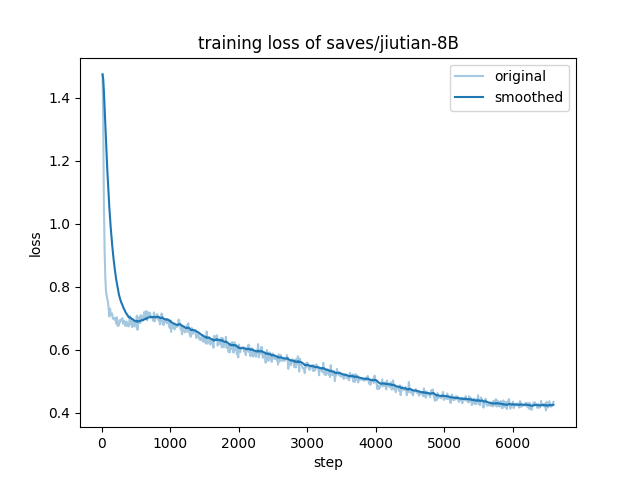
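A minimal sketch of proportion-based rebalancing, assuming the percentages in the table above are shares of training samples: each category is downsampled without replacement, or upsampled with replacement, to reach its target count. The function name and the up/downsampling policy are assumptions.

```python
import random

# Target shares (percent) taken from the table above
TARGET_MIX = {
    "Natural Language Understanding": 23.65,
    "Table Understanding": 24.84,
    "Table Basic Operation": 31.16,
    "Table Computational Operation": 15.77,
    "Data Analysis": 3.99,
    "Advanced Data Analysis": 0.59,
}


def build_mixture(pools: dict, target_mix: dict, total: int, seed: int = 0) -> list:
    """Sample from each category's pool so its share of `total` matches target_mix."""
    rng = random.Random(seed)
    mixture = []
    for category, pct in target_mix.items():
        n = round(total * pct / 100)
        pool = pools[category]
        if n <= len(pool):
            mixture.extend(rng.sample(pool, n))  # downsample without replacement
        else:
            mixture.extend(rng.choices(pool, k=n))  # upsample with replacement
    rng.shuffle(mixture)
    return mixture
```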

Reasoning SFT

To enhance the model's capability in structured table reasoning, we apply Supervised Fine-Tuning (SFT) focused on reasoning over tabular data to the JT-Coder-8B model. We adopt a standard teacher-forcing approach, in which the model is trained to predict the target output given the full context, and minimize the cross-entropy loss between the predicted and ground-truth tokens.
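Written out, the teacher-forcing objective is the standard token-level negative log-likelihood of the target. Here x denotes the full context (instruction and table), y = (y_1, ..., y_T) the ground-truth output tokens, and θ the model parameters; at each step the model conditions on the ground-truth prefix y_{<t} rather than on its own earlier predictions. Computing the loss over target tokens only and averaging over T is an assumption about masking and normalization, which the description above does not specify.

```latex
\mathcal{L}_{\mathrm{SFT}}(\theta) = -\frac{1}{T} \sum_{t=1}^{T} \log p_{\theta}\left(y_t \mid x,\, y_{<t}\right)
```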

Table Reasoning Workflow

To fully leverage the reasoning capabilities of the trained model in tabular data scenarios, we propose a workflow oriented toward structured data analysis. The workflow consists of four core components: table preprocessing, table sensing, tool-integrated reasoning, and prompt engineering. Together, these components form an end-to-end pipeline designed to enhance the model's ability to understand and reason over tabular datasets.

Table Preprocessing

Before table analysis and reasoning, the workflow preprocesses the input table so that it is clean, structured, and properly formatted. Table preprocessing involves handling missing values, splitting merged cells, identifying column headers, and standardizing them. After preprocessing, the table is transformed into a normalized structure that facilitates downstream table understanding and reasoning by the model. Moreover, the structured format reduces ambiguity in table layout and ensures consistent alignment between natural language queries and the corresponding data fields.
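A minimal pandas sketch of these preprocessing steps follows. It assumes the raw table was read without a header row (integer column labels) and that the caller already knows which columns contain vertically merged cells (`merged_cols`); detecting merged cells depends on the source format (e.g. spreadsheet metadata) and is not shown. The function names are hypothetical.

```python
import re

import pandas as pd


def standardize_header(name) -> str:
    """Lower-case and trim a header, joining word runs with underscores."""
    cleaned = re.sub(r"\W+", "_", str(name).strip().lower())
    return cleaned.strip("_")


def preprocess_table(raw: pd.DataFrame, merged_cols=()) -> pd.DataFrame:
    df = raw.copy()
    # 1. Identify column headers: promote the first row if the labels are positional
    if all(isinstance(c, int) for c in df.columns):
        df.columns = df.iloc[0]
        df = df.iloc[1:].reset_index(drop=True)
    # 2. Standardize column headers
    df.columns = [standardize_header(c) for c in df.columns]
    # 3. Split merged cells: a vertically merged cell is usually exported as one
    #    value followed by blanks, so copy the value down into each row it spans
    for col in merged_cols:
        df[col] = df[col].ffill()
    # 4. Handle missing values: drop rows and columns that are entirely empty
    df = df.dropna(how="all").dropna(axis=1, how="all")
    return df
```

Using `\W+` rather than an ASCII-only pattern keeps non-English headers (such as Chinese column names) intact.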

Table Sensing

Table sensing refers to the model’s contextual understanding of a table’s structure, semantics, and relationships. In this stage, the column headers and sample rows of each table are provided to the model. The model then identifies the type of each column (e.g., categorical, numerical, textual), infers potential relationships among columns, and detects any implicit hierarchies or grouping patterns. It also interprets header semantics, including disambiguating abbreviations, units, and domain-specific terminology. By observing sample rows, the model gains insight into typical value ranges, formats, and anomalies, enabling it to develop a robust “sense” of the data context.
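As an illustration of the information gathered during table sensing, the sketch below builds a compact profile from column headers and sample rows. In the workflow, column types are inferred by the model itself; the rule-based `infer_column_type` heuristic and its thresholds are hypothetical stand-ins used only to show what such a profile might contain.

```python
import pandas as pd


def infer_column_type(series: pd.Series, max_categories: int = 20) -> str:
    """Heuristic stand-in: classify a column as numerical, categorical, or textual."""
    values = series.dropna()
    if pd.api.types.is_numeric_dtype(series):
        return "numerical"
    if len(values) > 0 and pd.to_numeric(values, errors="coerce").notna().all():
        return "numerical"  # numbers stored as strings
    if values.nunique() <= max_categories and values.astype(str).str.len().mean() < 30:
        return "categorical"  # few distinct, short values
    return "textual"


def sense_table(df: pd.DataFrame, n_samples: int = 3) -> str:
    """Summarize headers, inferred types, and a few sample rows."""
    lines = ["Columns:"]
    for col in df.columns:
        lines.append(f"- {col}: {infer_column_type(df[col])}")
    lines.append(f"Sample rows (first {n_samples}):")
    lines.append(df.head(n_samples).to_string(index=False))
    return "\n".join(lines)
```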

Tool-Integrated Reasoning

To simulate the interactive process between humans and structured data, integrating tools, particularly code sandboxes, is essential for effective table analysis and reasoning. Delegating calculations to a sandbox effectively alleviates LLM hallucination in numerical computation. Moreover, by interacting with the sandbox, the LLM can gradually build a clear understanding of the table, which improves table reasoning performance. Finally, this interaction improves interpretability and reliability.
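The interaction between the model and a code sandbox can be sketched as a simple loop: generate a reply, extract a Python code block, execute it, and append the result to the context until the model answers without code. The `CodeExecuteResult` fence mirrors the case studies below; `generate` is a hypothetical callable wrapping the model, and running `exec` in-process is for illustration only, not a secure sandbox.

```python
import contextlib
import io
import re

FENCE = "`" * 3  # a Markdown code fence
CODE_BLOCK = re.compile(FENCE + r"python\n(.*?)" + FENCE, re.DOTALL)


def execute(code: str, namespace: dict) -> str:
    """Run code in a persistent namespace and capture stdout (NOT a secure sandbox)."""
    buffer = io.StringIO()
    try:
        with contextlib.redirect_stdout(buffer):
            exec(code, namespace)
    except Exception as exc:  # return the error so the model can revise its code
        return f"Error: {type(exc).__name__}: {exc}"
    return buffer.getvalue().strip()


def reasoning_loop(generate, question: str, max_turns: int = 5) -> str:
    """Alternate generation and execution until the model replies without code."""
    transcript = question
    namespace = {}  # variables persist across turns, like a notebook session
    for _ in range(max_turns):
        reply = generate(transcript)
        transcript += "\n" + reply
        match = CODE_BLOCK.search(reply)
        if match is None:
            break  # no code block: treat the reply as the final answer
        result = execute(match.group(1), namespace)
        transcript += f"\n{FENCE}CodeExecuteResult\n{result}\n{FENCE}"
    return transcript
```

Feeding errors back as text, rather than raising, lets the model correct its own code on the next turn.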

Prompt Engineering

Prompt engineering plays a critical role in guiding the model through multi-step table reasoning. To address the inherent challenges of tabular data understanding and reasoning, we propose a prompt optimization strategy specifically designed for table analysis. This strategy enhances the model’s contextual understanding and reasoning accuracy by decomposing the task into interpretable components. The core objectives of the prompt design are: understanding the table structure, understanding the problem statement, interpreting column semantics, identifying special values and formatting artifacts, and performing preliminary verification.
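A hypothetical prompt template covering the five objectives listed above might look like this. The wording is illustrative and is not the prompt used by the authors.

```python
def build_table_prompt(table_text: str, question: str) -> str:
    """Hypothetical prompt that walks the model through the five objectives."""
    steps = [
        "Understand the table structure: identify header rows, index columns, and any nested or merged layout.",
        "Understand the problem statement: restate what is asked and which quantities are needed.",
        "Interpret column semantics: clarify abbreviations, units, and domain-specific terms.",
        "Identify special values and formatting artifacts: note missing values, thousands separators, currency symbols, and footnote markers.",
        "Perform preliminary verification: check that the relevant rows and columns exist before computing the answer.",
    ]
    numbered = "\n".join(f"{i}. {step}" for i, step in enumerate(steps, start=1))
    return (
        f"You are given the following table:\n{table_text}\n\n"
        f"Question: {question}\n\n"
        f"Before answering, work through these steps:\n{numbered}"
    )
```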

Evaluation

Inference Example

- Install requirements:

```shell
pip install torch transformers accelerate
```

- Run inference. Here is an example Python script for inference using the JT-DA-8B model:

```python
import torch
from transformers import AutoTokenizer, AutoModelForCausalLM

model_path = "JT-LM/JT-DA-8B"

tokenizer = AutoTokenizer.from_pretrained(model_path, trust_remote_code=True)
model = AutoModelForCausalLM.from_pretrained(
    model_path,
    torch_dtype=torch.bfloat16,
    device_map="auto",  # place weights on the available GPU(s), or CPU
    trust_remote_code=True,
)
model.eval()

messages = [
    {"role": "user", "content": "Please generate a table and draw a bar chart"},
]

# Build the prompt with the model's chat template and move it to the model's device
inputs = tokenizer.apply_chat_template(
    messages,
    add_generation_prompt=True,
    return_tensors="pt",
).to(model.device)

generation_params = {
    "max_new_tokens": 1024,
    "do_sample": True,
    "temperature": 0.7,
    "top_p": 0.95,
    "top_k": 50,
}

with torch.no_grad():
    outputs = model.generate(inputs, **generation_params)

# Decode only the newly generated tokens (skip the prompt)
response = tokenizer.decode(outputs[0][inputs.shape[-1]:], skip_special_tokens=True)

print("--- User Query ---")
print(messages[0]["content"])
print("\n--- Model Response ---")
print(response)
```

Open Benchmark

We evaluate the model on TReB, a comprehensive open-source benchmark for assessing the table reasoning capabilities of LLMs. TReB provides:

- An open-source dataset combining cleaned public benchmarks, real-world web tables, and proprietary data, covering six core capabilities and 26 subtasks to support diverse table reasoning evaluations.

- An open-source evaluation framework specifically designed to measure LLM performance on table reasoning tasks. It integrates diverse inference modes and reliable metrics, enabling precise, multi-dimensional evaluation.

The test datasets (table reasoning tasks only) and inference modes are described below.

Test Dataset

| Skill | Description |
|---|---|
| Table Understanding (TU) | Focuses on parsing structures and comprehending content in tabular data. Evaluates table structure identification, key information retrieval, summary accuracy, and logical reasoning based on tabular facts. |
| Table Basic Operation (TBO) | Evaluates the ability to convert natural language intents into executable instructions for basic structured data operations. Covers query comprehension, field localization, and condition parsing. |
| Table Computational Operation (TCO) | Focuses on performing complex computational tasks in structured data scenarios. Evaluates mathematical expression comprehension, function selection, domain-specific computation, and complex relationship parsing. |
| Data Analysis (DA) | Measures basic statistical analysis and pattern recognition in structured data. Evaluates statistical metric calculation, variable relationship modeling, and result interpretation, reflecting data insight capabilities. |
| Advanced Data Analysis (ADA) | Handles high-complexity, multi-step (≥3 steps) data analysis tasks. Evaluates multi-stage information fusion, logical path planning, and cross-task reasoning, enabling structured data-driven complex problem solving and decision support. |

Inference modes

| Mode | Description |
|---|---|
| Textual Chain-of-Thought (TCoT) | TCoT is a reasoning mode in which LLMs solve data analysis problems step by step through pure textual reasoning. The final answer is output exclusively in text form. |
| Program-of-Thought (PoT) | PoT is a reasoning mode in which LLMs solve data analysis problems by generating executable code. In this mode, the model combines textual reasoning with programmatic outputs and ultimately produces a code block as its final answer. |
| Interleaved Chain-of-Thought (ICoT) | The ICoT mode enables models to perform multi-step reasoning by combining textual explanations and programmatic outputs in an iterative process. This advanced mode integrates planning, step-by-step execution, and self-reflection, allowing the model to dynamically adapt its reasoning based on intermediate results. |

We adopt the LLM-as-a-Judge method, consistent with the settings in the benchmark, to evaluate our model.

Experimental Results

The evaluation results show that JT-DA-8B excels among models of the same size, with strong performance on table understanding, querying, computation, and data analysis tasks.

Case Study

In this section, we present two cases that demonstrate the data analysis capabilities of JT-DA-8B.

Case1: Complex Data Analysis

This case comprehensively evaluates the model's capabilities in table and question understanding, data query pre-screening, and data computation. Additionally, the model must handle scenarios involving inconsistent and noisy data types in the dataset.

Question: What is the average annual growth rate for snap from 2016 to 2018?

Model Answer:

To calculate the average annual growth rate for Snap from 2016 to 2018, we need to first extract the relevant data points from the DataFrame. Then, we can compute the growth rates between consecutive years and finally find their average.

Here's the step-by-step process:

1. Extract the relevant data points for Snap from 2016 to 2018.

2. Compute the growth rates between consecutive years.

3. Calculate the average of these growth rates.

Let's implement this in Python:

```python
# Convert the 'snap-onincorporated' column to numeric values
df['snap-onincorporated'] = df['snap-onincorporated'].replace('[\$,]', '', regex=True).astype(float)

# Extract the relevant data points for Snap from 2016 to 2018
snap_2016 = df.loc[df['fiscal year ended ( 1 )'] == 'december 31 2016', 'snap-onincorporated'].values[0]
snap_2017 = df.loc[df['fiscal year ended ( 1 )'] == 'december 31 2017', 'snap-onincorporated'].values[0]
snap_2018 = df.loc[df['fiscal year ended ( 1 )'] == 'december 31 2018', 'snap-onincorporated'].values[0]

# Compute the growth rates between consecutive years
growth_rate_2016_to_2017 = (snap_2017 - snap_2016) / snap_2016 * 100
growth_rate_2017_to_2018 = (snap_2018 - snap_2017) / snap_2017 * 100

# Calculate the average of these growth rates
average_growth_rate = (growth_rate_2016_to_2017 + growth_rate_2017_to_2018) / 2

print(f"The average annual growth rate for Snap from 2016 to 2018 is {average_growth_rate:.2f}%")
```

```CodeExecuteResult
The average annual growth rate for Snap from 2016 to 2018 is -5.60%
```

So, the average annual growth rate for Snap from 2016 to 2018 is approximately -5.60%.
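The answer above takes the arithmetic mean of the two year-over-year growth rates. For comparison, the compound annual growth rate (CAGR) is another common reading of “average annual growth rate”; the two coincide only when the yearly rates are equal. The functions and the values in the note below are hypothetical and unrelated to the table in this case.

```python
def mean_yoy_growth(values: list) -> float:
    """Arithmetic mean of year-over-year growth rates, in percent (as in the answer above)."""
    rates = [(curr - prev) / prev * 100 for prev, curr in zip(values, values[1:])]
    return sum(rates) / len(rates)


def cagr(values: list) -> float:
    """Compound annual growth rate over len(values) - 1 yearly periods, in percent."""
    periods = len(values) - 1
    return ((values[-1] / values[0]) ** (1 / periods) - 1) * 100
```

For the hypothetical series `[100, 120, 108]`, the yearly rates are +20% and -10%, so the arithmetic mean is 5.0% while the CAGR is about 3.92%.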

Case2: Visualization and Analysis

This case demonstrates the model's capability in data visualization (with a chart tool) and analysis.

Question: According to the table, draw a bar chart to illustrate the highest temperatures recorded in degrees Celsius.

Model Answer:

To address your request, I'll follow these steps:

1. Identify the correct row containing maximum temperature records

2. Parse Celsius values from formatted strings

3. Create a bar chart showing monthly comparisons

Here's the implementation:

```python
import pandas as pd
import re

# Locate target row
record_high_row = df[df['Month'] == 'Record high °F (°C)'].iloc[0]

# Process temperature data conversion
month_labels = record_high_row.index[1:-1].tolist() # Exclude 'Month' & 'Year'

def parse_celsius(value):
    cleaned = str(value).strip('\"\n ')
    match = re.search(r'\(([\d.-]*)\)', cleaned)
    return float(match.group(1)) if match else None

temperatures = [parse_celsius(record_high_row[m]) for m in month_labels]

# Format data structure for plotting
chart_data = {
    "categories": month_labels,
    "series": [{
        "name": "Max Recorded Temperature",
        "data": temperatures
    }]
}

# Configuration settings
config = {
    "title": {"title": "Monthly Historical High Temperatures"},
    "xaxis": {"name": "Month"},
    "yaxis": {"name": "Temperature (°C)"},
    "tooltip": {"trigger": "item"},
    "grid": {"top": "20%", "containLabel": True}
}

# Render visualization
chart_creator = ChartTool()
visualization_path = chart_creator.create(
    chart_type="bar",
    data=chart_data,
    global_opts=config,
    render_path=f"./historical_max_temperatures_{pd.Timestamp.now():%Y%m%d}.html"
)

print(f"Highest temperatures successfully plotted! View report at:")
print(visualization_path)
```

```CodeExecuteResult
Highest temperatures successfully plotted! View report at:
./pic/historical_max_temperatures_20250723.html
```

This script generates an interactive bar chart displaying the historically recorded peak temperatures for every calendar month. Key features include:

- Clear axis labeling with proper units

- Automatic tooltip displays

- Responsive design suitable for web viewing

- Unique filename generation to prevent overwrites

The resulting HTML document contains embedded JavaScript rendering powered by PyEcharts framework. You can open this file in modern browsers like Chrome/Firefox/Safari to interact with zoom capabilities and hover effects.

License

JT-DA-8B is distributed under the terms of the Apache 2.0 license.

Disclaimer

JT-DA-8B is a large language model. Despite extensive data curation and training efforts, it may still produce content that is inaccurate, biased, or inappropriate. Users are advised to exercise discretion when interpreting the model’s outputs and assume full responsibility for any outcomes arising from its use.
