Model Card for PRInTS (Process Reward via Information gain scoring and Trajectory Summarization) Qwen3-4B

This repository hosts the PRInTS (Process Reward via Information gain scoring and Trajectory Summarization) Qwen3-4B model. PRInTS is a generative process reward model for long-horizon information-seeking tasks.

Model Details

Model Description

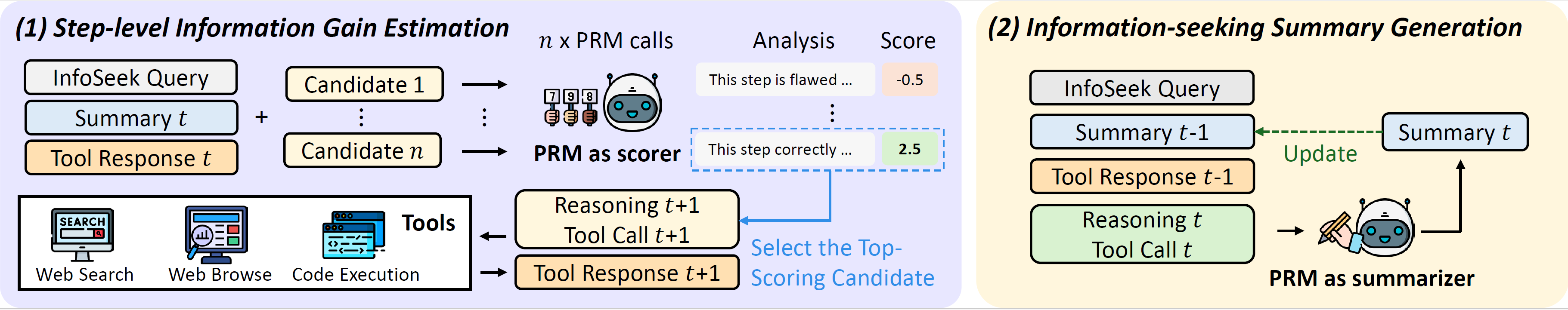

PRInTS (Process Reward via Information gain scoring and Trajectory Summarization) is a generative PRM jointly trained with two key abilities. Together they provide fine-grained guidance while addressing the challenge of context accumulation over long trajectories.

Key Highlights:

- 🎯 PRInTS as a scorer: evaluates multiple candidate next steps in an agent's trajectory, conditioned on the summarized context and the current tool response. It outputs dense scores based on the PRM's reasoning across multiple step-quality dimensions (e.g., interpretation of tool outputs, informativeness of tool calls).

- 📝 PRInTS as a summarizer: recursively updates a compact summary of the information-seeking trajectory. This keeps the input length bounded while preserving the key information needed for subsequent scoring.
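The two roles in the highlights above can be sketched as a per-step loop. First, score the candidate next steps against the current summary. Then keep the best candidate and fold it into the summary. This is a minimal sketch, not the PRInTS implementation. `guided_step`, `toy_score`, and `toy_summarize` are hypothetical names, and each toy function only stands in for a prompt to the model. The scorer is replaced by a crude new-word count used as an information-gain proxy, and the summarizer by word-budget truncation.

```python
from typing import Callable, List, Tuple

# Hypothetical interfaces; in practice each call would be a prompt to the PRInTS model.
ScoreFn = Callable[[str, str, str], float]    # (summary, tool_response, candidate) -> score
SummarizeFn = Callable[[str, str, str], str]  # (summary, chosen_step, tool_response) -> summary


def guided_step(summary: str, tool_response: str, candidates: List[str],
                score: ScoreFn, summarize: SummarizeFn) -> Tuple[str, str]:
    """Score each candidate next step, keep the best, and update the summary."""
    if not candidates:
        raise ValueError("need at least one candidate step")
    # Scorer role: every candidate is judged against the compact summary,
    # not against the full (ever-growing) trajectory.
    best = max(candidates, key=lambda c: score(summary, tool_response, c))
    # Summarizer role: the new summary depends only on the old summary and the
    # latest step, so the model input stays bounded however long the run gets.
    return best, summarize(summary, best, tool_response)


def toy_score(summary: str, tool_response: str, candidate: str) -> float:
    """Toy information-gain proxy: count candidate words not yet in the summary."""
    known = set(summary.lower().split())
    return float(len({w for w in candidate.lower().split() if w not in known}))


def toy_summarize(summary: str, step: str, tool_response: str, max_words: int = 30) -> str:
    """Toy stand-in for the learned summarizer: append, then keep the last max_words words."""
    words = f"{summary} {step} {tool_response}".split()
    return " ".join(words[-max_words:])


# Usage: a single guided step starting from an empty summary.
best, summary = guided_step("", "results mention the founder",
                            ["search founder of X", "search X"], toy_score, toy_summarize)
print(best)  # → search founder of X
```

The actual summarizer is a learned model rather than a truncation. The property the sketch preserves is that each update reads only the previous summary plus the newest step.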

- Developed by: Jaewoo Lee, Archiki Prasad, Justin Chih-Yao Chen, Zaid Khan, Elias Stengel-Eskin, Mohit Bansal

- Model type: Qwen3ForCausalLM, fine-tuned large language model

- Language(s) (NLP): English

- License: MIT

- Finetuned from model: Qwen3-4B

Model Sources

- Repository: https://github.com/G-JWLee/PRInTS

- Paper: PRInTS: Reward Modeling for Long-Horizon Information Seeking

Overview of PRInTS

Uses

Test-time scaling

At test time, the PRInTS (Qwen3-4B) model provides fine-grained guidance to information-seeking agents by estimating step-level information-gain scores across n rollouts of the agent.
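The step-level scores still need to be turned into a decision. One common option for process reward models is to aggregate each rollout's per-step scores, for example with `min` or the mean, and then rank the n rollouts by that value. This sketch shows that option as an assumption, not as the selection procedure from the paper. `rank_rollouts` and `best_rollout` are hypothetical helpers, and the per-step scores are assumed to have been produced by PRInTS beforehand.

```python
from statistics import mean
from typing import Callable, Dict, List, Sequence

Aggregate = Callable[[Sequence[float]], float]


def rank_rollouts(step_scores: Dict[str, Sequence[float]],
                  aggregate: Aggregate = min) -> List[str]:
    """Return rollout ids ordered from best to worst.

    step_scores maps each rollout id to its per-step PRM scores (at least one
    score per rollout); aggregate collapses those scores into a single number.
    """
    for rid, scores in step_scores.items():
        if not scores:
            raise ValueError(f"rollout {rid!r} has no step scores")
    return sorted(step_scores, key=lambda rid: aggregate(step_scores[rid]), reverse=True)


def best_rollout(step_scores: Dict[str, Sequence[float]], aggregate: Aggregate = min) -> str:
    """Pick the single highest-ranked rollout (best-of-n selection)."""
    return rank_rollouts(step_scores, aggregate)[0]


# Usage with made-up scores for n = 3 rollouts (illustrative numbers only).
example = {"r1": [0.9, 0.2, 0.9], "r2": [0.6, 0.7, 0.6], "r3": [0.5, 0.5, 0.4]}
print(rank_rollouts(example))        # → ['r2', 'r3', 'r1']
print(rank_rollouts(example, mean))  # → ['r1', 'r2', 'r3']
```

Taking the minimum favors rollouts without any weak step. The mean tolerates a single poor step if the other steps are strong, which is why `r1` moves from last to first between the two rankings.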

Citation

If you find this work useful, please consider citing us:

@article{lee2024prints,
  title={PRInTS: Reward Modeling for Long-Horizon Information Seeking},
  author={Jaewoo Lee and Archiki Prasad and Justin Chih-Yao Chen and Zaid Khan and Elias Stengel-Eskin and Mohit Bansal},
  year={2025},
  journal={arXiv preprint arXiv:2511.19314},
  url={https://arxiv.org/abs/2511.19314},
}
