SaSaSa2VA: Segmentation Augmented and Selective Averaged Sa2VA

[📜 arXiv] [🎥 YouTube] [🧑💻 GitHub] [🤗 HuggingFace] [🎯 Challenge]

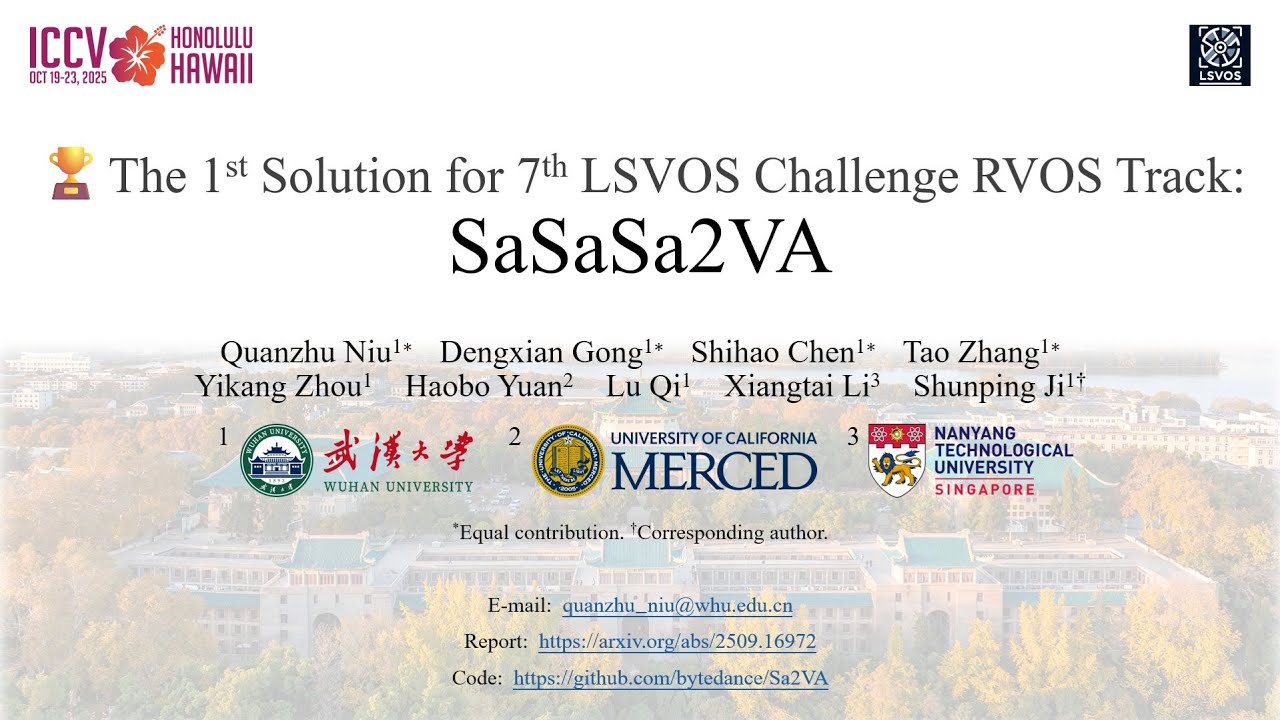

Quanzhu Niu1* · Dengxian Gong1* · Shihao Chen1* · Tao Zhang1* · Yikang Zhou1 · Haobo Yuan2 · Lu Qi1 · Xiangtai Li3 · Shunping Ji1†

1WHU 2UC Merced 3NTU

*equal contribution †corresponding author

🎉 1st Place in ICCV 2025 LSVOS Challenge RVOS Track! 🎉

We won 1st place in the ICCV 2025 LSVOS (Large-scale Video Object Segmentation) challenge RVOS (Referring Video Object Segmentation) track. The top 3 teams' methods are all based on Sa2VA. The challenge leaderboard:

| Method/Team Name | J&F | Report |
|---|---|---|
| 🏅 SaSaSa2VA (Ours) | 67.45 | 📜 arXiv |
| 🥈 Transsion | 64.65 | 📜 arXiv |
| 🥉 dytino | 64.14 | 📜 arXiv |

Please check out the full report of the challenge here.

Our report video at ICCV 2025 has been released!

⬇️ Please click the teaser to watch the video.

Model Zoo

We provide the following models:

| Model Name | Base MLLM | HF Link |
|---|---|---|
| SaSaSa2VA-4B | InternVL2.5-4B | 🤗 link |
| SaSaSa2VA-14B | InternVL3.5-14B | To be released |
| SaSaSa2VA-26B | InternVL2.5-26B | 🤗 link |

Usage

Installation and Data Preparation

Please refer to README.md for installation and data preparation.

- If you use `torch>=2.6.0`, you may have problems loading pth weights with `xtuner==0.1.23`. We recommend using `transformers==4.42.3` for 4B/26B and `transformers==4.52.1` for 14B.
- If you use the q_frame inference mode, generate the frame indices with Q-frame or download them from 🤗 Hugging Face. Then place them in `data/mevis_q_frame/valid/selected_frames.json` and `data/mevis_q_frame/valid_u/selected_frames.json`.
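Before running q_frame inference, it can help to confirm that both index files are in place. The following is a minimal sketch, not part of the repository: the helper name `check_q_frame_files` is hypothetical, and it only checks that each file exists and parses as JSON, since the schema of `selected_frames.json` is not described here.

```python
import json
from pathlib import Path

# Relative paths quoted from the instructions above.
Q_FRAME_FILES = (
    "data/mevis_q_frame/valid/selected_frames.json",
    "data/mevis_q_frame/valid_u/selected_frames.json",
)


def check_q_frame_files(repo_root="."):
    """Hypothetical helper: return (path, problem) pairs; empty list means both files look usable."""
    problems = []
    for rel in Q_FRAME_FILES:
        path = Path(repo_root) / rel
        if not path.is_file():
            problems.append((rel, "missing"))
            continue
        try:
            with path.open(encoding="utf-8") as fh:
                json.load(fh)  # only checks that the file parses; the schema is not validated
        except ValueError:  # json.JSONDecodeError is a subclass of ValueError
            problems.append((rel, "not valid JSON"))
    return problems


if __name__ == "__main__":
    for rel, problem in check_q_frame_files():
        print(f"{rel}: {problem}")
```

Run it from the repository root; no output means both files were found and parsed.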

Training

You can train SaSaSa2VA with:

bash tools/dist.sh train projects/sasasa2va/configs/YOUR_CONFIG NUM_GPUS

- Note: SaSaSa2VA uses the pretrained weights of Sa2VA. Please put the Sa2VA pretrained pth weights in `./pretrained/Sa2VA_pth/`.

Then run the following command to convert the pth model to a Hugging Face format model:

python projects/sasasa2va/hf/convert_to_hf.py projects/sasasa2va/configs/YOUR_CONFIG --pth-model PATH_TO_PTH_MODEL --save-path PATH_TO_HF_MODEL

Evaluation

You can evaluate SaSaSa2VA on MeViS valid (MEVIS) and valid_u (MEVIS_U) splits.

For inference:

projects/sasasa2va/evaluation/dist_test.sh projects/sasasa2va/evaluation/ref_vos_eval.py PATH_TO_HF_MODEL NUM_GPUS --dataset SPLIT --work-dir PATH_TO_OUTPUT --mode INFERENCE_MODE [--submit]

- We provide 5 inference modes: `uniform` (default), `uniform_plus`, `q_frame`, `wrap_around`, and `wrap_around_plus`. If you use q_frame mode, please prepare the q_frame indices in `data/mevis_q_frame/valid/selected_frames.json` and `data/mevis_q_frame/valid_u/selected_frames.json`.
- If you turn on `--submit`, the outputs are saved as .png masks for submission to the MeViS valid server.
- You can use `tools/llava_sam2_eval/eval_mevis.py` to compute metrics on the MeViS valid_u split.
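To compare the inference modes on one split, the evaluation command above can be generated once per mode. This is a minimal sketch under stated assumptions: `build_eval_command` is a hypothetical helper, and the GPU count and `work_dirs/...` output paths are illustrative placeholders. It only uses the script paths and flags shown in the command above, and it prints the commands rather than running them.

```python
import shlex

# The five mode names listed above.
INFERENCE_MODES = ("uniform", "uniform_plus", "q_frame", "wrap_around", "wrap_around_plus")


def build_eval_command(hf_model, num_gpus, dataset, work_dir, mode="uniform", submit=False):
    """Hypothetical helper: assemble the argv list for one evaluation run."""
    if mode not in INFERENCE_MODES:
        raise ValueError(f"unknown inference mode: {mode!r}")
    argv = [
        "projects/sasasa2va/evaluation/dist_test.sh",
        "projects/sasasa2va/evaluation/ref_vos_eval.py",
        hf_model,
        str(num_gpus),
        "--dataset", dataset,
        "--work-dir", work_dir,
        "--mode", mode,
    ]
    if submit:
        argv.append("--submit")  # write .png masks for the MeViS valid server
    return argv


if __name__ == "__main__":
    # 8 GPUs and the work_dirs/ layout are assumptions for illustration only.
    for mode in INFERENCE_MODES:
        argv = build_eval_command("PATH_TO_HF_MODEL", 8, "MEVIS_U", f"work_dirs/{mode}", mode=mode)
        print(shlex.join(argv))
```

Keeping a separate work directory per mode avoids one run overwriting the masks of another.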

Citation

If you find our work useful, please consider citing the challenge reports:

@article{sasasa2va,
  title={The 1st Solution for 7th LSVOS RVOS Track: {SaSaSa2VA}},
  author={Niu, Quanzhu and Gong, Dengxian and Chen, Shihao and Zhang, Tao and Zhou, Yikang and Yuan, Haobo and Qi, Lu and Li, Xiangtai and Ji, Shunping},
  journal={arXiv preprint arXiv:2509.16972},
  year={2025}
}

@article{liu2025lsvos,
  title={LSVOS 2025 Challenge Report: Recent Advances in Complex Video Object Segmentation},
  author={Chang Liu and Henghui Ding and Kaining Ying and Lingyi Hong and Ning Xu and Linjie Yang and Yuchen Fan and Mingqi Gao and Jingkun Chen and Yunqi Miao and Gengshen Wu and Zhijin Qin and Jungong Han and Zhixiong Zhang and Shuangrui Ding and Xiaoyi Dong and Yuhang Zang and Yuhang Cao and Jiaqi Wang and Chang Soo Lim and Joonyoung Moon and Donghyeon Cho and Tingmin Li and Yixuan Li and Yang Yang and An Yan and Leilei Cao and Feng Lu and Ran Hong and Youhai Jiang and Fengjie Zhu and Yujie Xie and Hongyang Zhang and Zhihui Liu and Shihai Ruan and Quanzhu Niu and Dengxian Gong and Shihao Chen and Tao Zhang and Yikang Zhou and Haobo Yuan and Lu Qi and Xiangtai Li and Shunping Ji and Ran Hong and Feng Lu and Leilei Cao and An Yan and Alexey Nekrasov and Ali Athar and Daan de Geus and Alexander Hermans and Bastian Leibe},
  journal={arXiv preprint arXiv:2510.11063},
  year={2025}
}
